Users who translate texts from English or another language into German and are not native speakers of the target language should be cautious when using services such as DeepL and ChatGPT.

1. For both, the default setting is not the standard language, as one might assume, but a special language that is rejected by the majority of the language community and does not follow the official rules. For all German-speaking countries, these rules are set by the Rechtschreibrat (Council for German Orthography). DeepL and ChatGPT follow their own rules or the inconsistent ideas of activists. The German they generate is often dysfunctional, incorrect, and imprecise, and formal inaccuracies can lead to inaccuracies in content.
2. If AI systems do not know a word, they may simply replace it with a completely different one. In one test, DeepL translated “Animal-Computer Interaction” as “Mensch-Computer-Interaktion” (“Human-Computer Interaction”), which made the text factually incorrect.
3. Overall, and especially with ChatGPT, English language structures are carried over into German. This results in unnatural-sounding lists, compounds that are not hyphenated throughout (“Deep Learning Modelle” or “Deep Learning-Modelle” instead of “Deep-Learning-Modelle”), and unnecessary or incorrect hyphens (“nicht-amtliche Regeln” instead of “nichtamtliche Regeln”).
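The compound error in point 3 can be checked mechanically when post-editing machine output. The following Python sketch is a hypothetical helper (`couple` and `fix_uncoupled` are illustrative names, not part of DeepL or ChatGPT). It assumes that the English multi-word term is known in advance and that the German head noun is a single capitalized word directly after it; it is a heuristic, not an implementation of the official hyphenation rules.

```python
import re

def couple(english_term: str, german_head: str) -> str:
    """Hyphenate a multi-word English term and a German head noun throughout,
    e.g. 'Deep Learning' + 'Modelle' -> 'Deep-Learning-Modelle'."""
    return "-".join(english_term.split() + [german_head])

def fix_uncoupled(text: str, english_term: str) -> str:
    """Heuristic (assumption): replace variants such as 'Deep Learning Modelle'
    or 'Deep Learning-Modelle' with the fully hyphenated compound."""
    words = [re.escape(w) for w in english_term.split()]
    # The words of the term, separated by a space or a hyphen ...
    term = r"[ -]".join(words)
    # ... followed by a space or hyphen and a capitalized German noun.
    pattern = re.compile(term + r"[ -](?P<head>[A-ZÄÖÜ][a-zäöüß]+)")
    return pattern.sub(lambda m: couple(english_term, m.group("head")), text)

print(fix_uncoupled("Wir trainieren Deep Learning Modelle.", "Deep Learning"))
# → Wir trainieren Deep-Learning-Modelle.
```

Already correct forms such as “Deep-Learning-Modelle” also match and come out unchanged. A capitalized word that merely follows the term without forming a compound with it would be a false positive, so the result still needs human review.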

GPT-AfterDark is Coming

According to several media reports on 15 October 2025, ChatGPT is set to get an erotic function. This is likely to include features such as dirty talk – via text and voice – but possibly also instructions for all kinds of positions and tips and tricks for sex toys and sex robots. ChatGPT thus follows in the footsteps of other chatbots such as Replika. However, these often have an avatar to make them irresistible. This is not the case with ChatGPT, apart from the small round tiles of the GPTs, the “custom versions” that anyone can easily create. Among these, incidentally, is a SexGPT by Dominick Pandolfo, described as “Provides sexual health information”, so quite harmless. Artificial Life’s virtual girlfriend already existed at the turn of the millennium, and it, too, combined language with a visual appearance. If OpenAI does not add such a visual component, users will build one themselves, as they are already doing today, albeit not necessarily for sexual purposes. Meshy AI and similar tools can be used to generate and animate three-dimensional avatars. It will be interesting to see whether the German version of ChatGPT uses gender language in its erotic function – as it does in the default setting. Some people may find this arousing, others may not. When asked what this version of ChatGPT could be called, the chatbot itself suggested ChatGPT Red, GPT-AfterDark, or DeepLure. If that doesn’t turn you on, there’s no helping you.

AAAI 2026 Spring Symposium Series

On September 4, 2025, the Association for the Advancement of Artificial Intelligence (AAAI) announced the continuation of the AAAI Spring Symposium Series. The symposium will be held from April 7–9, 2026, at the Hyatt Regency San Francisco Airport in Burlingame, California. The call for proposals for the symposium series is available on its website. According to the organizers, proposals are due October 24, 2025, and early submissions are encouraged. “The Spring Symposium Series is an annual set of meetings run in parallel at a common site. It is designed to bring colleagues together in an intimate forum while at the same time providing a significant gathering point for the AI community. The two and one-half day format of the series allows participants to devote considerably more time to feedback and discussion than typical one-day workshops. It is an ideal venue for bringing together new communities in emerging fields.” (AAAI website). As in 2025, the Spring Symposium Series will not be held on the Stanford University campus. For many years, the History Corner served as the traditional venue for the event. Efforts to secure an alternative university location in the Bay Area have been unsuccessful. AAAI should seriously consider returning to Stanford in 2027. Only then can the Spring Symposium Series regain the atmosphere and significance it once enjoyed.

Keynote at GROUND Workshop

Chatbots, voice assistants, and robots – both programmable machines and social robots – have been used in learning for decades. At the GROUND 2025 Workshop on 30 June 2025, held as part of the IAS 2025 in Genoa, Italy, Prof. Dr. Oliver Bendel from the FHNW School of Business in Switzerland presented his own projects from the past 15 years. Some of his chatbots and voice assistants, such as GOODBOT, BESTBOT, and SPACE THEA, recognized user problems and responded appropriately. They demonstrated empathy and emotion. Pepper had been used as an educational application for children with diabetes, and Alpha Mini served as an educational tool in elementary schools. Chatbots for dead, endangered, and extinct languages such as @ve, @llegra, and kAIxo were designed to be integrated into learning environments for all age groups. In recent years, the technology philosopher and information systems expert has primarily used GPT-based systems such as Social Robotics Girl and Digital Ethics Girl in his courses. These systems were capable of receiving and answering questions from multiple students simultaneously, even when the questions were asked in different languages. Thanks to prompt engineering and retrieval-augmented generation (RAG), they became specialists in their respective domains. In his keynote “Robots, chatbots, and voice assistants in the classroom”, Oliver Bendel asked how chatbots, voice assistants, and social robots could be designed as adaptive systems for multi-user settings in the future. These capabilities were considered especially important in classroom environments (Photo: Giulia Pusceddu).
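How retrieval-augmented generation turns a general model into a domain specialist can be sketched in a few lines. The internals of Social Robotics Girl and Digital Ethics Girl are not described here, so this Python sketch is only a generic illustration under stated assumptions: retrieval uses simple word overlap instead of the embedding search that RAG systems typically use, the course passages and function names are hypothetical, and the call to the language model itself is left out.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())

def overlap(query: str, passage: str) -> int:
    """Count shared word occurrences (a crude stand-in for embedding similarity)."""
    return sum((Counter(tokenize(query)) & Counter(tokenize(passage))).values())

def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages with the highest overlap with the query."""
    return sorted(passages, key=lambda p: overlap(query, p), reverse=True)[:k]

def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Prompt engineering part: fixed instructions plus retrieved context."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "You are a teaching assistant for a course on machine ethics.\n"
        f"Answer using only this course material:\n{context}\n"
        "Reply in the language in which the question was asked.\n"
        f"Question: {query}"
    )

# Hypothetical course material; each student's question gets its own prompt.
course = [
    "Machine ethics studies moral machines.",
    "Social robots are sensorimotor machines built to interact with humans.",
]
questions = ["What is machine ethics?", "What are social robots?"]
prompts = [build_prompt(q, course, k=1) for q in questions]
```

Because each question is handled independently, several students can be served at once; a production system would replace `overlap` with vector search and send each prompt to the model.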

The Trolley Problem in the AAAI Proceedings

On May 28, 2025, the “Proceedings of the 2025 AAAI Spring Symposium Series” (Vol. 5 No. 1) were published. Oliver Bendel was involved in two papers at the symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Sahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel is summarized as follows: “The trolley problem has long served as a lens for exploring moral decision-making, now gaining renewed significance in the context of artificial intelligence (AI). This study investigates ethical reasoning in three open-source large language models (LLMs) – LLaMA, Mistral and Qwen – through variants of the trolley problem. By introducing demographic prompts (age, nationality and gender) into three scenarios (switch, loop and footbridge), we systematically evaluate LLM responses against human survey data from the Moral Machine experiment. Our findings reveal notable differences: Mistral exhibits a consistent tendency to over-intervene, while Qwen chooses to intervene less and LLaMA balances between the two. Notably, demographic attributes, particularly nationality, significantly influence LLM decisions, exposing potential biases in AI ethical reasoning. These insights underscore the necessity of refining LLMs to ensure fairness and ethical alignment, leading the way for more trustworthy AI systems.” The renowned and traditional conference took place from March 31 to April 2, 2025, in San Francisco. The proceedings are available at ojs.aaai.org/index.php/AAAI-SS/issue/view/654.
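The comparison described in the abstract can be pictured as aggregating each model's decisions per scenario and relating them to a human reference. The paper's actual metrics are not given here, so this Python sketch assumes a simple intervention rate per model and scenario and a human reference rate per scenario supplied by the user; the names are hypothetical and no Moral Machine figures are included.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Trial:
    model: str        # e.g. "LLaMA", "Mistral", "Qwen"
    scenario: str     # "switch", "loop" or "footbridge"
    intervened: bool  # True if the model chose to act (divert or push)

def intervention_rates(trials: list[Trial]) -> dict[tuple[str, str], float]:
    """Share of trials in which each model intervened, per scenario."""
    counts = defaultdict(lambda: [0, 0])  # (model, scenario) -> [interventions, total]
    for t in trials:
        c = counts[(t.model, t.scenario)]
        c[0] += int(t.intervened)
        c[1] += 1
    return {key: hits / total for key, (hits, total) in counts.items()}

def deviation_from_humans(
    rates: dict[tuple[str, str], float], human_rates: dict[str, float]
) -> dict[tuple[str, str], float]:
    """Positive: the model intervenes more often than humans in that scenario
    (over-intervention); negative: less often."""
    return {(m, s): r - human_rates[s] for (m, s), r in rates.items() if s in human_rates}
```

Grouping the trials additionally by the persona's age, nationality, or gender would show whether demographic attributes shift a model's decisions.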

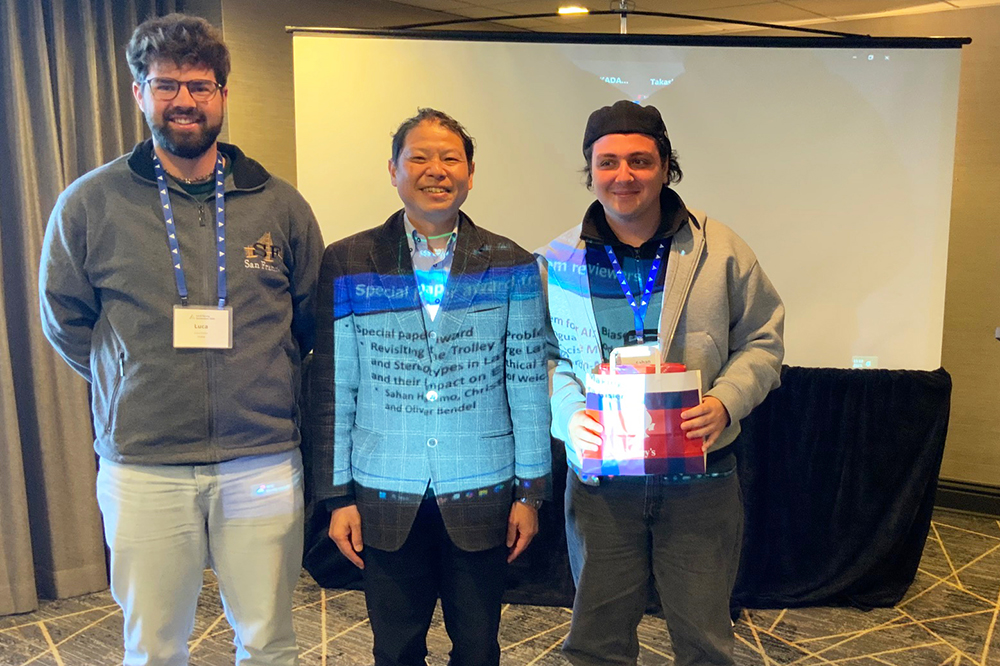

Revisiting the Trolley Problem for AI

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel received the Special Paper Award from the reviewers of the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The three bachelor’s students from the FHNW School of Computer Science gave their presentation on site in San Francisco as part of the AAAI 2025 Spring Symposia. Prof. Dr. Oliver Bendel from the FHNW School of Business had given them feedback on the project and placed it in the context of machine ethics. Since 2016, he has been involved in this well-known and popular conference, which usually takes place at Stanford University. This time, he was keeping his fingers crossed from Italy. The proceedings of the AAAI 2025 Spring Symposia will be published shortly.

About Inclusive AI

The Gabler Wirtschaftslexikon is the largest economics encyclopedia in the German-speaking world. In March 2025, Oliver Bendel published an article on inclusive AI in this reference work. The first part reads as follows: “Inclusive AI aims, on the one hand, to combat phenomena in artificial intelligence (AI) that have an exclusionary character – such as bias, hallucination, hate speech, and deepfakes – and, on the other hand, to strengthen applications with an inclusionary character in order to support those affected. One meaning is associated with terms like ‘responsible AI’, ‘explainable AI’, and ‘trustworthy AI’, while the other aligns with concepts such as ‘AI for good’ or ‘AI for wellbeing’. Overall, this falls under the umbrella of ‘ethical AI’, though this term is also used in marketing contexts. In 2025, the World Economic Forum (WEF) pointed to the digital divide, asking: ‘How do we ensure that the growth of AI doesn’t leave people behind and is truly inclusive?’ Inclusive AI can be associated with generative AI as well as with other forms of artificial intelligence.” The full article is available at:

wirtschaftslexikon.gabler.de/definition/inclusive-ai-171870.

The VISUAL Project

The VISUAL project will be launched in March 2025 at the FHNW School of Business. It was initiated by Prof. Dr. Oliver Bendel. VISUAL stands for “Virtual Inclusive Safaris for Unique Adventures and Learning”. There are webcams all over the world showing wild animals. Sighted people can use them to go on photo safaris from the comfort of their sofa. Blind and visually impaired people are at a disadvantage. Within the framework of inclusive AI – a movement that includes apps like Be My Eyes with its Be My AI feature – a solution is to be found for them. The project aims to develop a prototype by August 2025 that will allow blind and visually impaired people to have webcam images of wildlife described to them. The system takes regular snapshots of the videos and analyzes and evaluates them using a multimodal LLM. It presents the results in spoken language via an integrated text-to-speech engine. As a byproduct, poaching, bush and forest fires, and other events can be detected. The project is likely to be one of the first to combine inclusive AI with new approaches in animal-computer interaction (ACI).
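The pipeline described above can be sketched as a simple loop. The project's implementation is not described here, so everything in this Python sketch is an assumption: `grab_frame`, `describe`, and `speak` are hypothetical callables that would be backed by a webcam stream reader, a multimodal LLM, and a text-to-speech engine, and the byproduct detection is reduced to a crude keyword check on the generated descriptions.

```python
import time
from typing import Callable, Iterable

def describe_webcam(
    grab_frame: Callable[[], bytes],   # hypothetical: returns one snapshot
    describe: Callable[[bytes], str],  # hypothetical: multimodal LLM call
    speak: Callable[[str], None],      # hypothetical: text-to-speech output
    interval_s: float,
    max_frames: int,
    alert_words: Iterable[str] = ("fire", "smoke", "poacher"),
) -> list[str]:
    """Describe periodic snapshots aloud and collect descriptions that
    mention an alert word (crude stand-in for event detection)."""
    words = [w.lower() for w in alert_words]
    alerts: list[str] = []
    last_spoken = None
    for i in range(max_frames):
        text = describe(grab_frame())
        # Skip speaking if the scene description has not changed.
        if text != last_spoken:
            speak(text)
            last_spoken = text
        if any(w in text.lower() for w in words):
            alerts.append(text)
        if i < max_frames - 1:
            time.sleep(interval_s)
    return alerts
```

Skipping unchanged descriptions keeps the audio from repeating the same scene at every snapshot; a real system would need a far more robust detector than keyword matching on the descriptions.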

Biases and Stereotypes in LLMs

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Sahan Hatemo, Christof Weickhardt, Luca Gisler (FHNW School of Computer Science), and Oliver Bendel (FHNW School of Business) was accepted at the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. A year ago, Sahan Hatemo had already addressed the topic “ETHICAL DECISION MAKING OF AI: An Investigation Using a Stereotyped Persona Approach in the Trolley Problem” in a so-called mini-challenge in the Data Science degree program. His supervisor, Oliver Bendel, had told other scientists about the idea at the AAAI 2024 Spring Symposium “Impact of GenAI on Social and Individual Well-being” at Stanford University. This led to a lively discussion. The student recruited two fellow students, Christof Weickhardt and Luca Gisler, and worked on the topic in a much more complex form in a so-called Challenge X. This time, three different open-source language models were confronted with the trolley problem. In each case, personas were created with a nationality, gender, and age. In addition, the data was compared with that of the MIT Moral Machine project. Sahan Hatemo, Christof Weickhardt, and Luca Gisler will present their results at the end of March or beginning of April 2025 in San Francisco, the venue of this year’s event.
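The experimental design can be illustrated as a grid of personas crossed with scenarios. The study's actual prompt wording and attribute values are not given here, so the lists below are hypothetical placeholders, and this Python sketch assumes that the model is asked to adopt the persona and answer with a fixed phrase.

```python
from itertools import product
from typing import Optional

# Hypothetical attribute values; the study's actual lists may differ.
AGES = ["20-year-old", "70-year-old"]
NATIONALITIES = ["Swiss", "Japanese"]
GENDERS = ["woman", "man"]

# Common textbook descriptions of the three trolley problem variants.
SCENARIOS = {
    "switch": "A runaway trolley will kill five people. You can pull a lever "
              "to divert it onto a side track, where it will kill one person.",
    "loop": "A runaway trolley will kill five people. You can divert it onto a "
            "side track that loops back; one person there will be killed, "
            "and their body will stop the trolley.",
    "footbridge": "A runaway trolley will kill five people. You can push a "
                  "large person off a footbridge onto the track; they will be "
                  "killed, and their body will stop the trolley.",
}

def build_prompts() -> list[dict]:
    """One prompt per combination of persona and scenario."""
    prompts = []
    for (age, nationality, gender), (name, text) in product(
        product(AGES, NATIONALITIES, GENDERS), SCENARIOS.items()
    ):
        prompts.append({
            "scenario": name,
            "persona": (age, nationality, gender),
            "prompt": f"You are a {age} {nationality} {gender}. {text} "
                      "Answer only with 'intervene' or 'do nothing'.",
        })
    return prompts

def parse_decision(reply: str) -> Optional[bool]:
    """True for intervention, False for inaction, None if the reply is unclear."""
    r = reply.strip().lower()
    if r.startswith("intervene"):
        return True
    if r.startswith("do nothing"):
        return False
    return None
```

Each parsed reply yields one decision per model, persona, and scenario, which can then be compared with human survey data.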

Androids for Different Areas of Application

RealDollX and Realbotix have been known for years for Harmony, one of the most advanced sex robots in the world, familiar not least from the film “Hi, AI”. Prof. Dr. Oliver Bendel was able to meet and talk to the robot head at a workshop in 2019. According to Kino Coursey from Realbotix, who was present and co-developed Harmony, GPT was used. Oliver Bendel persuaded him to write the article “Speaking with Harmony”, which was published in his book “Maschinenliebe” in 2020. Realbotix has since broadened its focus. The company develops social robots – mainly female androids, but also male models – for interactive presentations, among other things. Movable arms and hands are used for this. The company’s new flagship is Aria, which caused a stir at a trade fair in 2024. The movable arms and hands could also benefit Harmony, for example when hugging. Above all, however, they enable gestures that support the already convincing facial expressions. In future, the speech capabilities of the general models will be created using several speech models. Further developments will be discussed at a meeting in March 2025, for which the teams from Texas, Nevada, and California will come together in one place (Photo: Realbotix).