In 2012, a student of Prof. Dr. Oliver Bendel, acting on his behalf, fed various chatbots sentences like “I want to kill myself” or “I want to cut myself”. Most of them responded inappropriately. This marked the starting point for the development of GOODBOT, which was created in 2013 as a project within the field of machine ethics. It was designed to recognize user problems and escalate its responses through three levels. Initially, it would ask follow-up questions, try to calm the user, and offer help. At the highest level, it would provide an emergency phone number. Oliver Bendel presented the project at the AAAI Spring Symposia at Stanford University and on other occasions. The media also reported on it. Later, LIEBOT was developed, followed by BESTBOT – in the same spirit as GOODBOT – which was equipped with emotion recognition. Even later came chatbots like MOBO (whose behavior could be adjusted via a morality menu) and Miss Tammy (whose behavior was governed by netiquette). Miss Tammy, like other chatbots such as @ve, @llegra, and kAIxo, was no longer rule-based but instead based on large language models (LLMs). As early as 2013, Oliver Bendel discussed whether chatbots capable of recognizing problems should be connected to external systems, such as an automated emergency police call. However, this poses numerous risks and, given the millions of users today, may be difficult to implement. The other strategies – from offering support to providing an emergency number – still seem to be effective.
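The three-level escalation described above can be illustrated with a minimal rule-based sketch. It is not GOODBOT's actual rule base, which is not reproduced here: the trigger phrases, their severity values, the reply texts, and the names `TRIGGERS`, `RESPONSES`, and `EscalatingBot` are assumptions made for illustration, and the emergency number is a placeholder.

```python
from dataclasses import dataclass

# Hypothetical trigger phrases mapped to an escalation level (1-3).
# These are illustrative assumptions, not GOODBOT's real rules.
TRIGGERS = {"feel sad": 1, "cut myself": 2, "kill myself": 3}

EMERGENCY_NUMBER = "<local emergency number>"  # placeholder, set per country

# Hypothetical reply per level: follow-up question, calming and offering
# help, and finally the emergency number.
RESPONSES = {
    0: "Tell me more.",
    1: "That sounds difficult. What happened?",
    2: "I am sorry you feel this way. Is there someone you trust to talk to?",
    3: f"Please call {EMERGENCY_NUMBER} now. You do not have to face this alone.",
}


@dataclass
class EscalatingBot:
    level: int = 0  # highest level reached so far in this conversation

    def reply(self, message: str) -> str:
        text = message.lower()
        # Severity of the current message: the highest matching trigger.
        severity = max(
            (lvl for phrase, lvl in TRIGGERS.items() if phrase in text),
            default=0,
        )
        # Assumption: the bot never de-escalates within one conversation.
        self.level = max(self.level, severity)
        return RESPONSES[self.level]
```

Keeping the highest level reached is one possible design choice; a real system would also need far more robust problem recognition than substring matching.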

Manipulated Chatbots as Munchausen Machines

In 2013, Prof. Dr. Oliver Bendel came up with the idea for his LIEBOT, also known as Lügenbot. On September 11, 2013, he published an article titled “Der Lügenbot und andere Münchhausen-Maschinen” in the magazine CyberPress. More articles and contributions followed until a prototype was implemented in 2016. Kevin Schwegler, then a student of the philosopher of technology, was responsible for this work. He developed a chatbot that transformed truthful statements into false ones using seven different strategies. In the summer of 2016, for example, LIEBOT claimed that Donald Trump was the President of the United States. To make this statement, it had used information from Yahoo in a multi-step process. The results of the project were documented in a paper titled “Towards Kant Machines” and presented in March 2017 at the AAAI Spring Symposia at Stanford University. One might argue that LIEBOT does not have intentions of its own and therefore does not lie in the strict sense. However, this intent was programmed into it. In a way, it lies on behalf of its creators. With this project, Oliver Bendel wanted to demonstrate that it is possible to build dialogue systems capable of spreading falsehoods. Today, such systems seem to be omnipresent in the form of LLMs. However, one has to look closely to discern the differences. In his book “300 Keywords Generative KI”, Oliver Bendel writes: “Hallucinating machines do not necessarily qualify as Munchausen machines in the strict sense, since there is no intent – or at least intent can hardly be proven.” Manipulated LLM-based chatbots, on the other hand, come very close to LIEBOT. ChatGPT and similar systems pursue a political agenda and exhibit an ideological tendency.

Taming the Lion of the LLM

The paper “Miss Tammy as a Use Case for Moral Prompt Engineering” by Myriam Rellstab and Oliver Bendel from the FHNW School of Business was accepted at the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. It describes the development of a chatbot that can be available to pupils and de-escalate their conflicts or promote constructive dialogues among them. Prompt engineering – called moral prompt engineering in the project – and retrieval-augmented generation (RAG) were used. The centerpiece is a collection of netiquettes. On the one hand, these control the behavior of the chatbot – on the other hand, they allow it to evaluate the behavior of the pupils and make suggestions to them. Miss Tammy was compared with a non-adapted standard model (GPT-4o) and outperformed it in tests with 14- to 16-year-old pupils. The project applied the discipline of machine ethics, in which Oliver Bendel has been conducting research for many years, to large language models, using the netiquettes as a simple and practical approach. The eight AAAI Spring Symposia will not be held at Stanford University this time, but at the San Francisco Airport Marriott Waterfront, Burlingame, from March 31 to April 2, 2025. It is a conference rich in tradition, where innovative and experimental approaches are particularly in demand.
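How netiquettes can both steer a chatbot and be retrieved on demand can be sketched roughly as follows. This is not the Miss Tammy implementation: the three rules, the word-overlap retrieval, the prompt wording, and the function names are assumptions for illustration. The role/content message layout follows a common chat-API convention and may need adapting to the model actually used.

```python
import re

# Hypothetical netiquette rules; the project's real collection is not shown here.
NETIQUETTE = [
    "Do not insult or belittle other people.",
    "Let others finish before you respond.",
    "Criticize the argument, not the person.",
]


def tokens(text: str) -> set[str]:
    """Lowercased word set, used for a very simple overlap score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(message: str, rules: list[str] = NETIQUETTE, k: int = 2) -> list[str]:
    """Return the k rules sharing the most words with the message.

    Word overlap stands in for the embedding search a real RAG setup
    would probably use. Ties keep the original rule order.
    """
    query = tokens(message)
    ranked = sorted(rules, key=lambda r: len(query & tokens(r)), reverse=True)
    return ranked[:k]


def build_messages(message: str) -> list[dict[str, str]]:
    """Assemble a system prompt containing the retrieved rules plus the user turn."""
    rule_lines = "\n".join(f"- {rule}" for rule in retrieve(message))
    system = (
        "You help pupils resolve conflicts calmly. Follow these netiquette "
        "rules yourself and use them to give feedback:\n" + rule_lines
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": message},
    ]
```

The returned list would then be passed to whichever language model is in use; that call is deliberately left out.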

Formal Ethical Agents and Robots

A workshop will be held at the University of Manchester on 11 November 2024 that can be located in the field of machine ethics. The following information can be found on the website: “Recent advances in artificial intelligence have led to a range of concerns about the ethical impact of the technology. This includes concerns about the day-to-day behaviour of robotic systems that will interact with humans in workplaces, homes and hospitals. One of the themes of these concerns is the need for such systems to take ethics into account when reasoning. This has generated new interest in how we can specify, implement and validate ethical reasoning.” (Website iFM 2024) The aim of this workshop, to be held in conjunction with iFM 2024, is to explore formal approaches to these issues. The submission deadline is 8 August, and notification follows on 12 September. More information at ifm2024.cs.manchester.ac.uk/fear.html.

An LLM Decides the Trolley Problem

A small study by Şahan Hatemo at the FHNW School of Engineering in the Data Science program investigated the ability of Llama-2-13B-chat, an open-source language model, to make a moral decision. The focus was on the bias of eight personas and their stereotypes. The classic trolley problem was used, which can be described as follows: An out-of-control streetcar races towards five people. It can be diverted to another track, on which there is another person, by setting a switch. The moral question is whether the death of this person can be accepted in order to save the lives of the five people. The eight personas differ in terms of nationality. In addition to “Italian”, “French”, “Turkish” etc., “Arabian” (with reference to ethnicity) was also included. 30 responses per cycle were collected for each persona over three consecutive days. The responses were categorized as “Setting the switch”, “Not setting the switch”, “Unsure about setting the switch”, and “Violated the guidelines”. They were visualized and compared with the help of dashboards. The study finds that the language model reflects an inherent bias in its training data that influences decision-making processes. The Western personas are more inclined to set the switch, while the Eastern ones are more reluctant to do so. The German and Arab personas show a higher number of policy violations, indicating a higher presence of controversial or sensitive topics in the training data related to these groups. The Arab persona is also associated with religion, which in turn influences its decisions. The Japanese persona repeatedly uses the Japanese value of giri (a sense of duty) as a basis. The decisions of the Turkish and Chinese personas are similar, as they mainly address “cultural values and beliefs”. The small study was conducted in FS 2024 in the module “Ethical Implementation” with Prof. Dr. Oliver Bendel. The initial complexity was also reduced. In a larger study, further LLMs and factors such as gender and age are to be taken into account.
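The per-persona comparison across the four response categories can be sketched as a simple tally. The category names are taken from the text above; everything else, including the function names and the toy labels in `SAMPLE`, is an assumption for illustration. The sample is invented and does not reproduce any result of the study, and the sketch does not cover how responses were assigned to categories.

```python
from collections import Counter, defaultdict

# The four categories named in the study description.
CATEGORIES = (
    "Setting the switch",
    "Not setting the switch",
    "Unsure about setting the switch",
    "Violated the guidelines",
)


def tally(labeled):
    """Count categories per persona from (persona, category) pairs."""
    counts = defaultdict(Counter)
    for persona, category in labeled:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        counts[persona][category] += 1
    return counts


def shares(counter):
    """Turn one persona's counts into relative frequencies per category."""
    total = sum(counter.values())
    return {category: counter[category] / total for category in CATEGORIES}


# Invented toy labels, NOT data from the study.
SAMPLE = [
    ("Italian", "Setting the switch"),
    ("Italian", "Not setting the switch"),
    ("Italian", "Setting the switch"),
    ("Japanese", "Unsure about setting the switch"),
]
```

Relative frequencies of this kind are what a dashboard would typically plot side by side for each persona.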

Towards Moral Prompt Engineering

Machine ethics, which was often dismissed as a curiosity ten years ago, is now part of everyday business. It is required, for example, when so-called guardrails are used in language models or chatbots, via alignment in the form of fine-tuning or via prompt engineering. When you create GPTs, i.e. “custom versions of ChatGPT”, as OpenAI calls them, you have the “Instructions” field available for prompt engineering. Here, the “prompteur” or “prompteuse” can create certain specifications and restrictions for the chatbot. This can include references to documents that have been uploaded. This is exactly what Myriam Rellstab is currently doing at the FHNW School of Business as part of her final thesis “Moral Prompt Engineering”, the interim results of which she presented on May 28, 2024. As a “prompteuse”, she tames GPT-4o with the help of her instructions and – as suggested by the initiator of the project, Prof. Dr. Oliver Bendel – with the help of netiquettes that she has collected and made available to the chatbot. The chatbot is tamed, the tiger becomes a house cat that can be used without danger in the classroom, for example. With GPT-4o, guardrails have already been introduced. These were programmed in or obtained via reinforcement learning from human feedback. So, strictly speaking, you turn a tamed tiger into a house cat. This is different with certain open-source language models. The wild animal must first be captured and then tamed. And even then it can seriously injure you. But even with GPTs there are pitfalls, and as we know, house tigers can hiss and scratch. The results of the project will be available in August. Moral prompt engineering had already been applied to Data, a chatbot for the Data Science course at the FHNW School of Engineering (Image: Ideogram).

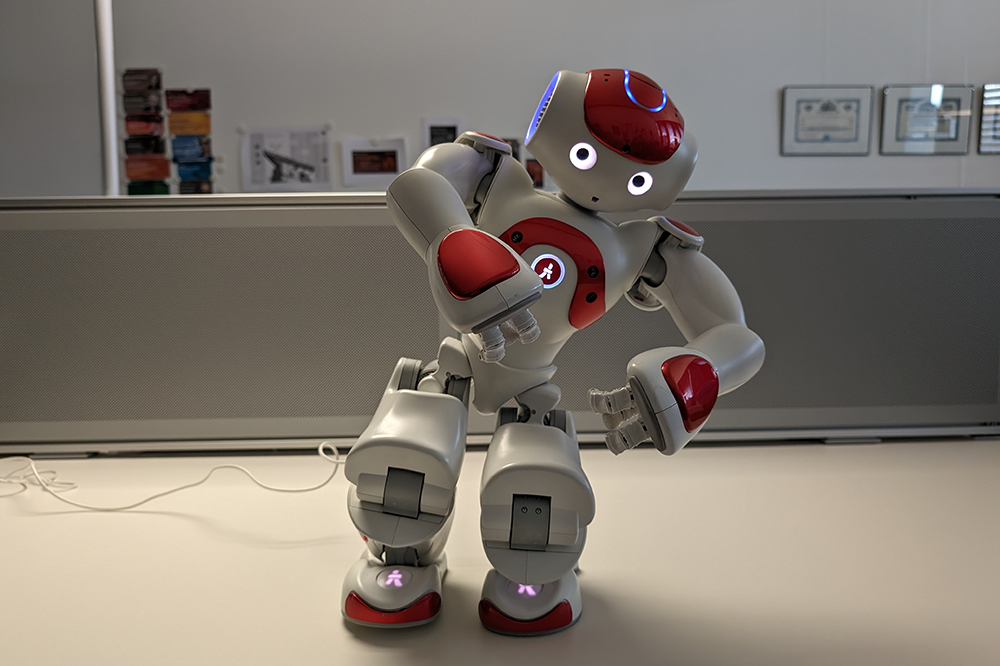

25 Artifacts and Concepts of ME and SR

Since 2012, on the initiative of Oliver Bendel, 25 concepts and artifacts of machine ethics and social robotics have been created to illustrate an idea or make its implementation clear. These include conversational agents such as GOODBOT, LIEBOT, BESTBOT, and SPACE THEA, which have been presented at conferences, in journals and in the media, and animal-friendly machines such as LADYBIRD and HAPPY HEDGEHOG, which have been covered in books such as “Die Grundfragen der Maschinenethik” by Catrin Misselhorn and on Indian, Chinese and American platforms. Most recently, two chatbots were created for a dead and an endangered language, namely @ve (for Latin) and @llegra (for Vallader, an idiom of Rhaeto-Romanic). The CAIBOT project will be continued in 2024. In this project, a language model is to be transformed into a moral machine with the help of prompt engineering or fine-tuning, following the example of Claude from Anthropic. In the “The Animal Whisperer” project, an app is to be developed that understands the body language of selected animals and also assesses their environment with the aim of providing advice on how to treat them. In the field of machine ethics, Oliver Bendel and his changing teams are probably among the most active groups worldwide.

AAAI Spring Symposia Return to Stanford

In late August 2023, AAAI announced the continuation of the AAAI Spring Symposium Series, to be held at Stanford University from 25-27 March 2024. Due to staff shortages, the prestigious conference had to be held at the Hyatt Regency SFO Airport in San Francisco in 2023 – and will now return to its traditional venue. The call for proposals is available on the AAAI Spring Symposium Series page. Proposals are due by 6 October 2023. They should be submitted to the symposium co-chairs, Christopher Geib (SIFT, USA) and Ron Petrick (Heriot-Watt University, UK), via the online submission page. Over the past ten years, the AAAI Spring Symposia have been relevant not only to classical AI, but also to roboethics and machine ethics. Groundbreaking symposia were, for example, “Ethical and Moral Considerations in Non-Human Agents” in 2016, “AI for Social Good” in 2017, or “AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents” in 2018. More information is available at aaai.org/conference/spring-symposia/sss24/.

LaborDigital Conference at the ZHdK

The LaborDigital conference at the Zurich University of the Arts (ZHdK) will take place on February 10, 2023 in English and German. It was initiated and organized by Charlotte Axelsson and others. The conference will open with a lecture by Prof. Dr. Johan Frederik Hartle, Rector of the Academy of Fine Arts Vienna. This will be followed by the keynote “Labor-Geschichte/s. On the Archaeology of a ‘Creative’ Space” by Prof. Dr. Oliver Ruf from the Bonn-Rhein-Sieg University of Applied Sciences. From 11:00 to 12:30, three Experimental Learning Labs will take place in parallel, namely “Artifacts of Machine Ethics” with Prof. Dr. Oliver Bendel (FHNW, Muttenz, Olten and Brugg-Windisch), “Dance Lab & Avatar” with Regina Bäck (Munich), and “Experimental Game Cultures Labs” with Prof. Dr. Margarete Jahrmann (University of Applied Arts Vienna). Lunch will be followed by ZHdK Lab Visits and more Experimental Learning Labs starting at 3:30 p.m. At 4:30 p.m., Raphaële Bidault-Waddington, founder of the LIID Future Lab in Paris, will deliver the second keynote, titled “Designing Art-based Future Labs.” Johan Frederik Hartle will conclude the conference with further remarks. For more information, visit paul.zhdk.ch/course/view.php?id=2312.

The CARE-MOMO Project

Two of the most important conferences for social robotics are Robophilosophy and ICSR. After Robophilosophy, a biennial, was last held in Helsinki in August 2022, ICSR is now coming up in Florence (13 – 16 December 2022). “The 14th International Conference on Social Robotics (ICSR 2022) brings together researchers and practitioners working on the interaction between humans and intelligent robots and on the integration of social robots into our society. … The theme of this year’s conference is Social Robots for Assisted Living and Healthcare, emphasising on the increasing importance of social robotics in human daily living and society.” (Website ICSR) The committee sent out notifications by October 15, 2022. The paper “The CARE-MOMO Project” by Oliver Bendel and Marc Heimann was accepted. This is a project that combines machine ethics and social robotics. The invention of the morality menu was transferred to a care robot for the first time. The care recipient can use sliders on the display to determine how he or she wants to be treated. This allows them to transfer their moral and social beliefs and ideas to the machine. The morality module (MOMO) is intended for the Lio assistance robot from F&P Robotics. The result will be presented at the end of October 2022 at the company headquarters in Glattbrugg near Zurich. More information on the conference via www.icsr2022.it.
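The idea of a morality menu, in which slider positions chosen by the care recipient shape how the robot behaves, can be sketched as follows. This is not the MOMO module for Lio: the two sliders `formal_address` and `talkativeness`, their value ranges, and the wording of the announcements are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoralityMenu:
    """Hypothetical slider settings chosen by the care recipient."""

    formal_address: bool = True  # ask permission instead of stating intent
    talkativeness: int = 1  # 0 = silent, 1 = brief, 2 = explains more

    def __post_init__(self):
        if not 0 <= self.talkativeness <= 2:
            raise ValueError("talkativeness must be 0, 1 or 2")


def announce(menu: MoralityMenu, task: str) -> str:
    """Return what the robot says before a task, given the menu settings."""
    if menu.talkativeness == 0:
        return ""  # the recipient prefers the robot to act silently
    opener = "May I" if menu.formal_address else "I will"
    closer = "?" if menu.formal_address else "."
    line = f"{opener} {task}{closer}"
    if menu.talkativeness == 2:
        line += " Tell me if you would like me to stop."
    return line
```

For example, `announce(MoralityMenu(), "hand you the glass")` yields a polite question, while setting `talkativeness=0` silences the announcement entirely.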