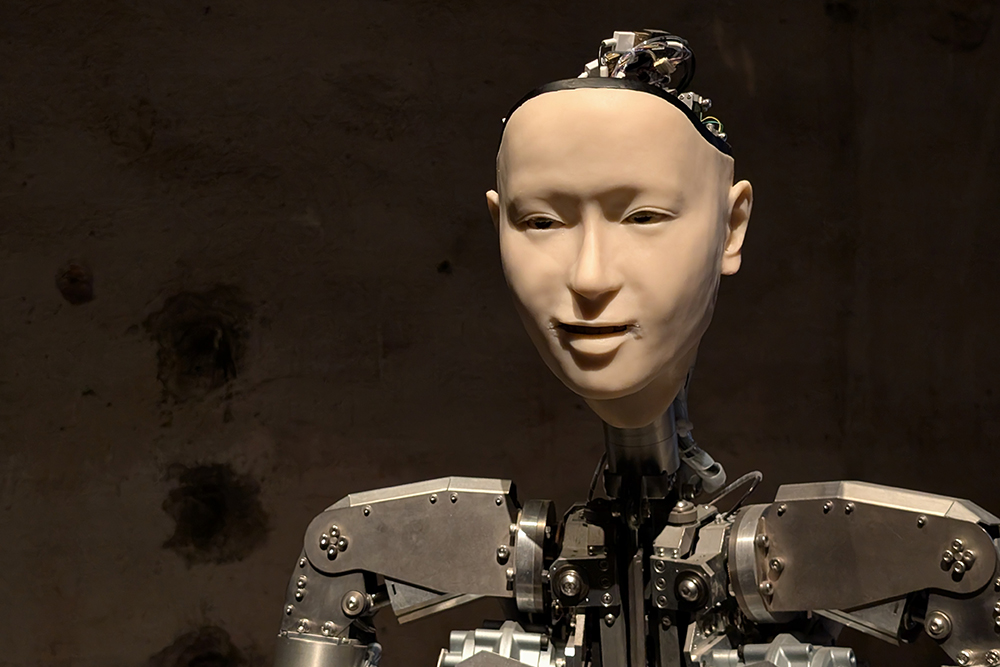

The installation entitled “Am I a Strange Loop?” will be on display at the 2025 Architecture Biennale in Venice. It raises the question of whether artificial intelligence can develop a form of self-awareness. The installation features the humanoid robot Alter3, which has facial, gestural, and verbal abilities. It uses GPT-4 or GPT-5. Visitors can communicate with it in different languages via a microphone. The installation draws on ideas from physicist, computer scientist, and cognitive scientist Douglas Hofstadter, who assumed that consciousness arises when a system reflects on itself. Alter3 is an impressive robot with a silicone face and silicone hands, but otherwise has a machine-like presence. GPT-4, GPT-5, and other language models can create neither world consciousness nor self-awareness.

About Inclusive Robotics

The Gabler Wirtschaftslexikon is the largest economics encyclopedia in the German-speaking world. In March 2025, Oliver Bendel published an article on inclusive robotics in this reference work. The first part reads as follows: “Inclusive robotics designs, develops, controls, manufactures, and operates robotic solutions for individuals with disabilities or impairments. The goal is to enable them to fully participate in social life and to engage in education, culture, and sports. This can involve the replacement or enhancement of a body part, as well as the use of a robot that provides assistance (an assistive robot or companion robot) or is worn on or with the body (a wearable robot). Robotic quadrupeds and bipedal general-purpose robots are becoming increasingly relevant. In the future, these will function as universal robots – true generalists. Inclusive Robotics addresses not only physical aspects but also psychological ones. It is closely related to inclusive AI.” The full article is available at: wirtschaftslexikon.gabler.de/definition/inclusive-robotics-172663 (Photo: ETH Zurich, Cybathlon/Alessandro Della Bella).

Keynote by David Chalmers

On the second day of Robophilosophy 2024, David Chalmers, an Australian philosopher and cognitive scientist, gave a keynote speech entitled “Does Generative AI Extend the Mind?”. From the website: “Prof. Chalmers is known worldwide, also outside of philosophy, for his contributions to consciousness research in ‘The Character of Consciousness’ (2010), and in particular for identifying more clearly than anyone the tasks for a philosophical account of consciousness (‘the hard problem’ of consciousness). Since recent advances in AI bring Chalmers’ thought experiment of ‘philosophical zombies’ closer to reality, in his current research he combines philosophy and a longstanding interest in AI for the exploration of the status of virtual reality … and the likelihood of creating artificial systems that fulfil the criteria of consciousness.” (Website Robophilosophy 2024) A key statement was: “As social AI systems become increasingly agent-like, they will decreasingly extend the mind …” The final statement was: “The quest for agency and sociality in robots may stand in tension with considerations of both efficiency and morality.” The audience applauded the witty and humorous talk.

AAAI Spring Symposia Return to Stanford

In late August 2023, AAAI announced the continuation of the AAAI Spring Symposium Series, to be held at Stanford University from 25 to 27 March 2024. Due to staff shortages, the prestigious conference had to be held at the Hyatt Regency SFO Airport in San Francisco in 2023 – and will now return to its traditional venue. The call for proposals is available on the AAAI Spring Symposium Series page. Proposals are due by 6 October 2023. They should be submitted to the symposium co-chairs, Christopher Geib (SIFT, USA) and Ron Petrick (Heriot-Watt University, UK), via the online submission page. Over the past ten years, the AAAI Spring Symposia have been relevant not only to classical AI, but also to roboethics and machine ethics. Groundbreaking symposia included, for example, “Ethical and Moral Considerations in Non-Human Agents” in 2016, “AI for Social Good” in 2017, and “AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents” in 2018. More information is available at aaai.org/conference/spring-symposia/sss24/.

Robots in Hawai’i – Part 1

Hawaii (Hawaiian: Hawai’i) is not necessarily associated with robots. But in the larger cities, you can be sure to encounter them. There are service robots such as the Keenon Dinerbot and surgical robots such as the da Vinci Surgical System. In addition, robots are used in the wild, for example for nature conservation. The hospital group in the east of the archipelago advertises with the following words: “East Hawaii Health Clinics is excited to introduce our fully operational robotic surgery center. This state-of-the-art equipment allows us to perform minimally invasive surgeries that would otherwise require greater surgery time and recovery time. We look forward to continuing to provide East Hawaii with exceptional surgical options.” (Website East Hawaii Health Clinics) This type of care is available in Hilo, among other places. The small town on the Big Island has the latest medical technology, such as that used in Basel and Berlin. Hilo is surrounded by tropical rainforest. Nearby are Akaka Falls and Hawai’i Volcanoes National Park. In the city itself, giant banyan trees grow. One must not forget the many homeless people who are at the end of their tether. Like California, Hawai’i has not dealt with this problem. These people will never be able to afford treatment with a surgical robot.

An Investigation of Robotic Hugs

From March 27 to 29, 2023, the AAAI 2023 Spring Symposia will feature the symposium “Socially Responsible AI for Well-being” by Takashi Kido (Teikyo University, Japan) and Keiki Takadama (The University of Electro-Communications, Japan). The venue is usually Stanford University. For staffing reasons, this year the conference will be held at the Hyatt Regency in San Francisco. On March 28, Prof. Dr. Oliver Bendel will present the paper “Increasing Well-being through Robotic Hugs”, written by himself, Andrea Puljic, Robin Heiz, Furkan Tömen, and Ivan De Paola. From the abstract: “This paper addresses the question of how to increase the acceptability of a robot hug and whether such a hug contributes to well-being. It combines the lead author’s own research with pioneering research by Alexis E. Block and Katherine J. Kuchenbecker. First, the basics of this area are laid out with particular attention to the work of the two scientists. The authors then present HUGGIE Project I, which largely consisted of an online survey with nearly 300 participants, followed by HUGGIE Project II, which involved building a hugging robot and testing it on 136 people. At the end, the results are linked to current research by Block and Kuchenbecker, who have equipped their hugging robot with artificial intelligence to better respond to the needs of subjects.” More information via aaai.org/conference/spring-symposia/sss23/.

How People React to Hugs from Robots

As part of the AAAI 2023 Spring Symposia in San Francisco, the symposium “Socially Responsible AI for Well-being” is organized by Takashi Kido (Teikyo University, Japan) and Keiki Takadama (The University of Electro-Communications, Japan). The paper “Increasing Well-being and Health through Robotic Hugs” by Oliver Bendel, Andrea Puljic, Robin Heiz, Furkan Tömen, and Ivan De Paola was accepted. Among other things, they show how people in Switzerland react to robotic hugs. The talk will take place between March 26 and 29, 2023 at Hyatt Regency, San Francisco Airport. The symposium website states: “For our happiness, AI is not enough to be productive in exponential growth or economic/financial supremacies but should be socially responsible from the viewpoint of fairness, transparency, accountability, reliability, safety, privacy, and security. For example, AI diagnosis system should provide responsible results (e.g., a high-accuracy of diagnostics result with an understandable explanation) but the results should be socially accepted (e.g., data for AI (machine learning) should not be biased (i.e., the amount of data for learning should be equal among races and/or locations). Like this example, a decision of AI affects our well-being, which suggests the importance of discussing ‘What is socially responsible?’ in several potential situations of well-being in the coming AI age.” (Website AAAI) According to the organizers, the first perspective is “(Individually) Responsible AI”, which aims to clarify what kinds of mechanisms or issues should be taken into consideration to design Responsible AI for well-being. The second perspective is “Socially Responsible AI”, which aims to clarify what kinds of mechanisms or issues should be taken into consideration to implement social aspects in Responsible AI for well-being. More information via www.aaai.org/Symposia/Spring/sss23.php#ss09.

LaborDigital Conference at the ZHdK

The LaborDigital conference at the Zurich University of the Arts (ZHdK) will take place on February 10, 2023 in English and German. It was initiated and organized by Charlotte Axelsson and others. The conference will open with a lecture by Prof. Dr. Johan Frederik Hartle, Rector of the Academy of Fine Arts Vienna. This will be followed by the keynote “Labor-Geschichte/s. On the Archaeology of a ‘Creative’ Space” by Prof. Dr. Oliver Ruf from the Bonn-Rhein-Sieg University of Applied Sciences. From 11:00 to 12:30, three Experimental Learning Labs will take place in parallel, namely “Artifacts of Machine Ethics” with Prof. Dr. Oliver Bendel (FHNW, Muttenz, Olten and Brugg-Windisch), “Dance Lab & Avatar” with Regina Bäck (Munich), and “Experimental Game Cultures Labs” with Prof. Dr. Margarete Jahrmann (University of Applied Arts Vienna). Lunch will be followed by ZHdK Lab Visits and more Experimental Learning Labs starting at 3:30 pm. At 4:30 p.m., Raphaële Bidault-Waddington, founder of the LIID Future Lab in Paris, will deliver the second keynote, titled “Designing Art-based Future Labs.” Johan Frederik Hartle will conclude the conference with further remarks. For more information, visit paul.zhdk.ch/course/view.php?id=2312.

AI for Well-being

As part of the AAAI 2023 Spring Symposia in San Francisco, the symposium “Socially Responsible AI for Well-being” is organized by Takashi Kido (Teikyo University, Japan) and Keiki Takadama (The University of Electro-Communications, Japan). The AAAI website states: “For our happiness, AI is not enough to be productive in exponential growth or economic/financial supremacies but should be socially responsible from the viewpoint of fairness, transparency, accountability, reliability, safety, privacy, and security. For example, AI diagnosis system should provide responsible results (e.g., a high-accuracy of diagnostics result with an understandable explanation) but the results should be socially accepted (e.g., data for AI (machine learning) should not be biased (i.e., the amount of data for learning should be equal among races and/or locations). Like this example, a decision of AI affects our well-being, which suggests the importance of discussing ‘What is socially responsible?’ in several potential situations of well-being in the coming AI age.” (Website AAAI) According to the organizers, the first perspective is “(Individually) Responsible AI”, which aims to clarify what kinds of mechanisms or issues should be taken into consideration to design Responsible AI for well-being. The second perspective is “Socially Responsible AI”, which aims to clarify what kinds of mechanisms or issues should be taken into consideration to implement social aspects in Responsible AI for well-being. More information via www.aaai.org/Symposia/Spring/sss23.php#ss09.

AAAI 2023 Spring Symposia in San Fran

The Association for the Advancement of Artificial Intelligence (AAAI) is pleased to present the AAAI 2023 Spring Symposia, to be held at the Hyatt Regency, San Francisco Airport, California, March 27-29. According to the organizers, Stanford University cannot act as host this time because of insufficient staff. Symposia of particular interest from a philosophical point of view are “AI Climate Tipping-Point Discovery”, “AI Trustworthiness Assessment”, “Computational Approaches to Scientific Discovery”, “Evaluation and Design of Generalist Systems (EDGeS): Challenges and methods for assessing the new generation of AI”, and “Socially Responsible AI for Well-being”. According to AAAI, symposia generally range from 40 to 75 participants each. “Participation will be open to active participants as well as other interested individuals on a first-come, first-served basis.” (Website AAAI) Over the past decade, the conference has become one of the most important venues in the world for discussions on robot ethics, machine ethics, and AI ethics. From 2024, it will be held again at History Corner. Further information via www.aaai.org/Symposia/Spring/sss23.php.