As part of the ToBIT event series at the FHNW School of Business, four students of Prof. Dr. Oliver Bendel explored four topics related to his field of research during the fall semester 2025/2026: “The decoding of symbolic languages of animals”, “The decoding of animal body language”, “The decoding of animal facial expressions and behavior”, and “The decoding of extraterrestrial languages”. The students presented their papers on January 9, 2026. In some cases, they not only reviewed the state of research but also developed an independent position. The paper “The decoding of extraterrestrial languages” by Ilija Bralic argues that Messaging Extraterrestrial Intelligence (METI), in contrast to passive SETI (Search for Extraterrestrial Intelligence), creates a dangerous imbalance between humanity’s rapidly expanding technical capacity to send interstellar messages and its limited ethical, scientific, and political ability to govern this power responsibly. The central thesis is that the moral justifications for METI are speculative and anthropocentric, relying largely on optimistic assumptions about extraterrestrial behavior, while the potential risks are severe, logically grounded, and potentially existential. These risks include fundamental misinterpretation caused by the “human lens,” strategic dangers described by the Dark Forest hypothesis, historical patterns of harm in technologically asymmetric encounters, and profound cultural, psychological, and political disruption. The paper concludes that unilateral METI decisions by individuals or private groups are ethically indefensible and that, under the Precautionary Principle, humanity should immediately halt active transmissions. As a solution, it proposes a binding international governance framework, including a temporary global moratorium, the creation of a dedicated international authority, a strict multi-stage decision-making protocol, and robust transparency and monitoring mechanisms.
This approach frames responsible restraint – not transmission – as humanity’s first genuine test of cosmic maturity.

AI and Human Creativity

As part of the AAAI Spring Symposia, the symposium “Will AI Light Up Human Creativity or Replace It?: Toward Well-Being AI for co-evolving human and machine intelligence” focuses on how advances in generative AI, large language models, and multi-agent systems are transforming human creativity and decision-making. It addresses the central question of whether AI will amplify human potential or increasingly take its place. The symposium advances the idea of Well-Being AI, emphasizing human-AI collaboration and co-evolution rather than AI as an isolated or autonomous system. While highlighting the potential of AI to support creativity, discovery, and personal development, it also examines risks such as overreliance, reduced diversity of thought, and loss of human autonomy. Chaired by Takashi Kido (see photo) of Teikyo University and Keiki Takadama of The University of Tokyo, the symposium brings together researchers and practitioners from technical, philosophical, and social disciplines to discuss principles and frameworks for AI that augments rather than replaces human creativity. Further information on this symposium and the broader AAAI Spring Symposia organized by the Association for the Advancement of Artificial Intelligence can be found at https://aaai.org/conference/spring-symposia/sss26/ and webpark2506.sakura.ne.jp/aaai/sss26-will-ai-light-up-human-creativity-or-replace-it/.

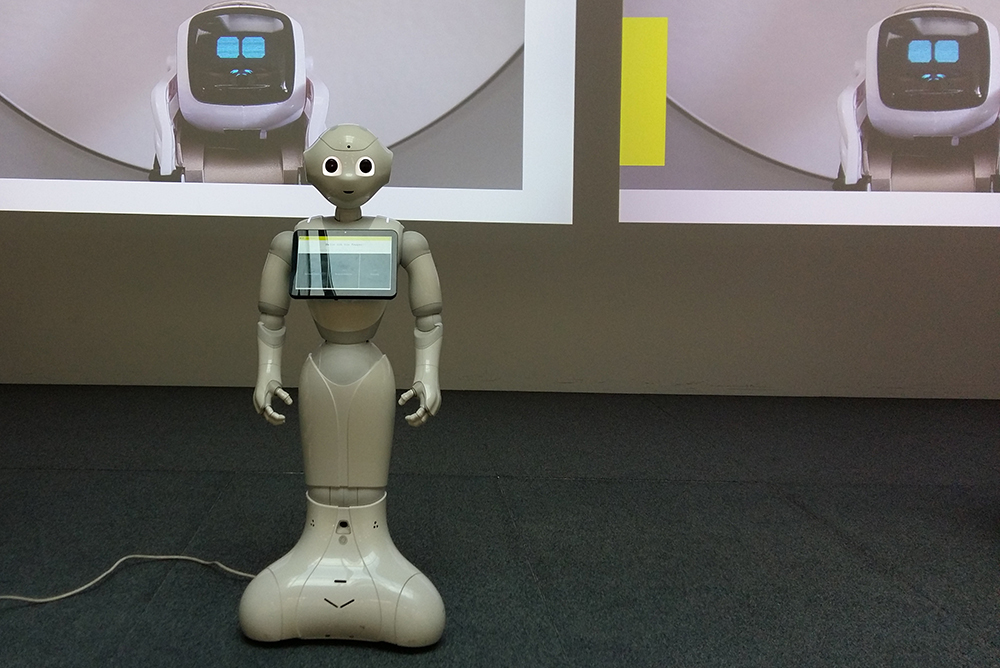

Talking to a Robot

The article “Small Talk with a Robot Reduces Stress and Improves Mood” by Katharina Kühne, Antonia L. Z. Klöffel, Oliver Bendel, and Martin H. Fischer was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part III.” From the abstract: “Research has demonstrated that social support is crucial in mitigating stress and enhancing mood. Not only do long-term, meaningful relationships contribute to well-being, but everyday social interactions, such as small talk, also offer psychological benefits. As social robots increasingly become more integrated into daily life, they present a potential avenue for stress interventions. In our online study, 98 participants underwent a stress induction using the Stroop task and were then assigned to one of three conditions: engaging in scripted small talk with a simulated NAO robot online, listening to a neutral story told by the same NAO robot, or no intervention (control condition). Results indicate that both interventions effectively reduced stress, with a tendency towards a stronger effect in the Small talk condition. Small talk not only helped maintain positive affect but also reduced negative affect. Notably, the benefits were more pronounced among individuals experiencing higher acute stress following the stress induction, but were less evident in those with chronically elevated stress levels. Furthermore, the effect of the intervention on stress reduction was mediated by changes in positive affect. These findings suggest that small talk with a social robot may serve as a promising tool for stress reduction and affect regulation.” The first author, a researcher from the University of Potsdam, presented the paper on September 12, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2398-6_1.

Robots in Space

The article “Wearable Social Robots in Space” by Tamara Siegmann and Oliver Bendel was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part I.” From the abstract: “Social robots have been developed on Earth since the 1990s. This article shows that they can also provide added value in space – particularly on a manned flight to Mars. The focus in this paper is on wearable social robots, which seem to be an obvious type due to their small size and low weight. First, the environment and situation of the astronauts are described. Then, using AIBI as an example, it is shown how it fits into these conditions and requirements and what tasks it can perform. Possible further developments and improvements of a wearable social robot are also mentioned in this context. It becomes clear that a model like AIBI is well suited to accompany astronauts on a Mars flight. However, further developments and improvements in interaction and communication are desirable before application.” The Swiss student presented the paper together with her professor on September 10, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2379-5_33.

Navel, please come to me

Contact between Navel Robotics and the FHNW School of Business has existed since March 2021. At that time, it had not yet been decided whether Navel, the social robot, should move on a ball or on wheels. Claude Toussaint, the founder and inventor, discussed this and other questions with Prof. Dr. Oliver Bendel. Toussaint ultimately opted for wheels, unlike the Mirokaï models by Enchanted Tools (the female Miroka and the male Miroki), which today zip around on a ball like BB-8. Navel stands out for its large, seemingly round eyes. This effect is created by a special lens, as the displays themselves are not curved. In the case of the Mirokaï robots, the face, including eyes, nose, mouth, and facial expressions, is projected onto a face shell, similar to the Furhat heads. The contact between the company and the university continued. On February 18, 2024, Claude Toussaint joined the elective module “Social Robots” remotely, and on June 6, 2024, he was a guest at the Future Lab hosted by Oliver Bendel at Learntec. In November 2025, Navel Robotics was able to announce on its website: “Many customers have been waiting for this moment – we have too … From now on, Navel will gradually learn to move independently in environments such as stations and residential areas. ‘Navel, please come to me’ – the first step toward autonomous driving.” (Navel Robotics website, own translation) In nursing homes and assisted-living facilities, social robots of this kind can be useful, as can assistive robots such as Lio, which are capable of physically manipulating objects. Miroka and Miroki combine both worlds: they are advanced assistive robots and fully fledged social robots. The future will show which approaches prevail (Photo: ICSR).

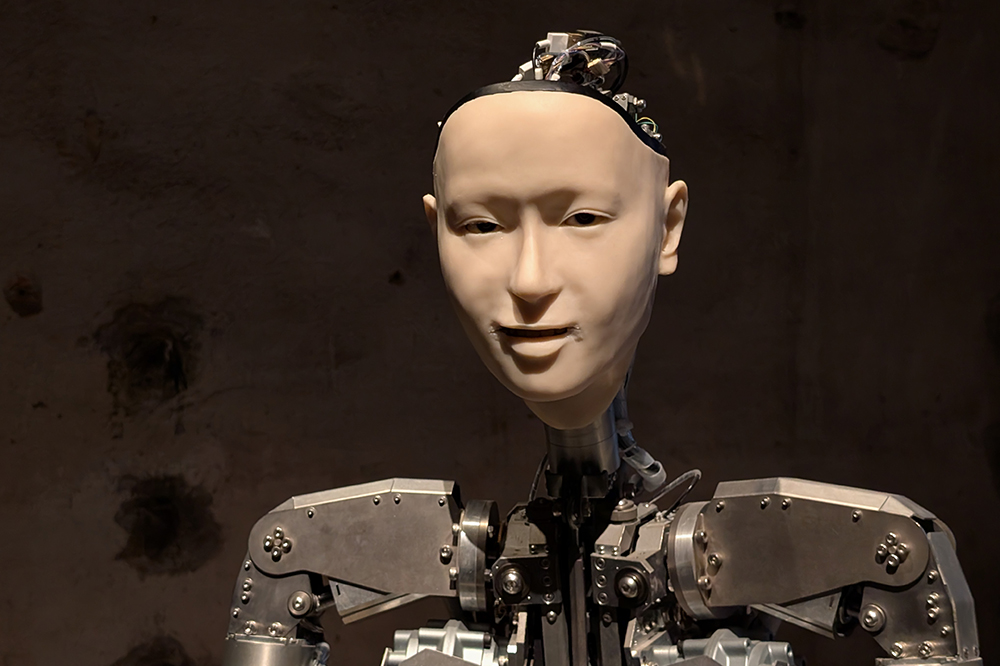

Human-likeness in AI

The Research Topic “Exploring human-likeness in AI: From perception to ethics and interaction dynamics”, hosted by Frontiers in Cognition, invites contributions on how human-like features in robots and AI systems influence user perception, trust, interaction, and ethical considerations. As AI becomes more integrated into society, anthropomorphic design raises pressing questions: Do human-like traits improve communication and acceptance, or do they lead to unrealistic expectations? What ethical implications arise when machines simulate empathy or emotion? This interdisciplinary call welcomes contributions from fields such as psychology, engineering, philosophy, and education. Submissions may include empirical research, theoretical analysis, reviews, or case studies that explore how human-likeness shapes the way we engage with AI. The submission deadline has been extended: manuscript summaries are now due by February 12, 2026, and full manuscripts by March 12, 2026. Articles will undergo peer review and are subject to publication fees upon acceptance. Topic editors are Dr. Katharina Kühne (University of Potsdam, Germany) and Prof. Dr. Roger K. Moore (The University of Sheffield, United Kingdom). For full details and submission guidelines, visit: www.frontiersin.org/research-topics/72370/exploring-human-likeness-in-ai-from-perception-to-ethics-and-interaction-dynamics.

Sexuality and Generative AI

The upcoming “SAGA: Sexuality and Generative AI” symposium, taking place on April 30, 2026, at the Université du Québec à Montréal, explores how generative AI is reshaping intimacy, desire, relationships, and sexual expression. As AI systems increasingly create images, stories, and even interactive romantic companions, they raise new ethical, legal, and social questions that researchers, practitioners, and industry voices will tackle together. The hybrid, bilingual event is free and open to all, offering live translation and captioning. In its first block on social impacts, the symposium features talks such as Brian Willoughby’s exploration of how romantic AI affects young adults’ relationships, Arnaud Anciaux’s analysis of ownership and consent around AI-generated sexual content, Beáta Bőthe’s work on AI-generated pornography and problematic use, and Oliver Bendel’s look at erotic chatbot interactions. With discussions ranging from the regulation of AI-generated pornography to digital intimacy and AI-supported therapeutic interventions, the event promises a rich and essential conversation. Full details and submission information are available at https://event.fourwaves.com/sexualiteia/pages.

Communicating with Fellow Beings and Extraterrestrials

As part of the ToBIT event series at the University of Applied Sciences and Arts Northwestern Switzerland (FHNW), four students working with Prof. Dr. Oliver Bendel are addressing four topics within his research area: “The Decoding of Symbolic Languages of Animals”, “The Decoding of Animal Body Language”, “The Decoding of Animal Facial Expressions and Behavior”, and “The Decoding of Extraterrestrial Languages”. The first three topics are also being explored, or have already been explored, in dedicated projects. DEEP VOICE focuses on whale communication. The Animal Whisperer Project comprised three apps that analyzed and evaluated the body language of cows, horses, and dogs, while VISUAL provided blind and visually impaired individuals with audio descriptions of images from wildlife webcams. In ANIFACE, a system was designed to identify individual bears in the Alps using facial recognition. Projects involving the reception of extraterrestrial signals and communication with alien life forms were discussed in the book “300 Keywords Weltraum”. The students presented their interim results on November 26, 2025. The final ToBIT event will take place in January 2026.

ICSR – Call for Competition Entries

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from 1 to 4 July 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. As part of this edition, the ICSR 2026 Competition invites visionary concepts and prototypes for social robots that collaborate, care, and connect with people beyond the laboratory. Designers, engineers, artists, researchers, and pupils or students at school, college, or university level are invited to submit projects ranging from functional solutions to artistic or hybrid works. The competition features two categories: the Robot Design Competition, focusing on innovation in functionality, interaction, and application; and the Robot Art Competition, highlighting creative fusions of fashion, art, performance, and robotics. Hybrid projects may apply for both awards. Each entry must be described in a summary of up to two pages (preferably following Springer LNAI formatting), including an abstract of no more than 50 words and sufficient detail to judge novelty and impact. A single optional video link (maximum three minutes) and images or renderings are encouraged. Submissions should indicate whether they apply for the Design Award, the Art Award, or both, and be uploaded via the competition form at: icsr2026.uk/competition/. The competition submission deadline is 1 March 2026; finalists will be notified on 15 April 2026, and winners will be announced on 3 July 2026 during the closing ceremony of ICSR 2026.

AI Systems Harm the German Language

Users who translate texts from English or another language into German and are not native speakers of the target language should be cautious when using services such as DeepL and ChatGPT.

1. For both, the default setting is not the standard language, as one might assume, but a special language that is rejected by the majority of the language community and does not follow the official rules. These rules are set for all German-speaking countries by the Rechtschreibrat (Council for German Orthography). DeepL and ChatGPT instead follow their own rules or the inconsistent ideas of activists. The German they generate is often dysfunctional, incorrect, and imprecise, and formal inaccuracies can lead to inaccuracies in content.
2. If AI systems do not know a word, they may simply replace it with a completely different one. In one test, DeepL translated “Animal-Computer Interaction” as “Mensch-Computer-Interaktion” (“Human-Computer Interaction”), which made the text factually incorrect.
3. Overall, especially with ChatGPT, English language structures are transferred to German. This results in unnatural-sounding lists, incorrectly joined compounds (“Deep Learning Modelle” or “Deep Learning-Modelle” instead of “Deep-Learning-Modelle”), and unnecessary or incorrect hyphens (“nicht-amtliche Regeln” instead of “nichtamtliche Regeln”).