On October 24, 2025, the kick-off meeting for the ECHO project took place at the FHNW School of Business. Two weeks later, on November 7, the proposal was approved. The project collaborator is BIT student Lucas Chingis Marty, who is writing his thesis on this topic. The initiator is Prof. Dr. Oliver Bendel. ECHO is an MLLM-based chatbot that introduces children, young people, and laypeople to the world of music. It can listen to, describe, and evaluate pieces and songs. To do this, it is equipped with a powerful audio analysis module. It refers to key, melody, and harmony, among other things. ECHO makes music accessible and understandable without requiring any prior knowledge. The aim is to promote curiosity, listening comprehension, and artistic taste. The prototype is expected to be available in February 2026.

Incorrect Translations of ChatGPT

Many users notice the overly correct or unidiomatic language of ChatGPT in German. This is probably because the model draws on multilingual structures when generating text and sometimes uncritically transfers English-language patterns to German. The same problem shows up in several other errors and deviations. Oliver Bendel has compiled an overview of these. This is a first draft, which will be gradually revised and expanded. He considers the deliberate interventions made by OpenAI to be particularly worrying. For example, the use of gender language, which is a special language, stems from the principles that are implemented at different levels. The default setting can theoretically be switched off via prompts, but in practice ChatGPT often ignores such instructions, even for Plus users who have always excluded gender language. The American company is thus siding with those who force people to use the special language – with numerous media, publishers, and universities.

Miss Tammy in the AAAI Proceedings

On May 28, 2025, the “Proceedings of the 2025 AAAI Spring Symposium Series” (Vol. 5 No. 1) were published. Oliver Bendel was involved in two papers at the symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The paper “Miss Tammy as a Use Case for Moral Prompt Engineering” by Myriam Rellstab and Oliver Bendel is summarized as follows: “This paper describes an LLM-based chatbot as a use case for moral prompt engineering. Miss Tammy, as it is called, was created between February 2024 and February 2025 at the FHNW School of Business as a custom GPT. Different types of prompt engineering were used. In addition, RAG was applied by building a knowledge base with a collection of netiquettes. These usually guide the behavior of users in communities but also seem to be useful to control the actions of chatbots and make them competent in relation to the behavior of humans. The tests with pupils aged between 14 and 16 showed that the custom GPT had significant advantages over the standard GPT-4o model in terms of politeness, appropriateness, and clarity. It is suitable for de-escalating conflicts and steering dialogues in the right direction. It can therefore contribute to users’ well-being and is a step forward in human-compatible AI.” The renowned and long-established conference took place from March 31 to April 2, 2025, in San Francisco. The proceedings are available at ojs.aaai.org/index.php/AAAI-SS/issue/view/654.

Image Synthesis from an Ethical Perspective

The article “Image Synthesis from an Ethical Perspective” by Prof. Dr. Oliver Bendel was published as an electronic version in the journal AI & SOCIETY on September 27, 2023. It addresses the ethical implications of image generators, i.e., a specific form of generative AI. It can now also be found in the current print edition from February 2025 (Volume 40, Issue 2). From the abstract: “Generative AI has gained a lot of attention in society, business, and science. This trend has increased since 2018, and the big breakthrough came in 2022. In particular, AI-based text and image generators are now widely used. This raises a variety of ethical issues. The present paper first gives an introduction to generative AI and then to applied ethics in this context. Three specific image generators are presented: DALL-E 2, Stable Diffusion, and Midjourney. The author goes into technical details and basic principles, and compares their similarities and differences. This is followed by an ethical discussion. The paper addresses not only risks, but opportunities for generative AI. A summary with an outlook rounds off the article.” A lot has happened with image generators since 2023. The new one from OpenAI now also allows photorealistic images, and it has fewer problems with average-looking people – DALL-E 2 and 3 favored beauty over mediocrity and ugliness. The article can be downloaded from link.springer.com/article/10.1007/s00146-023-01780-4 (Image: DALL-E 3).

22 Chatbots and Voice Assistants

Since 2013, Oliver Bendel has developed 22 chatbots and voice assistants together with his students or colleagues. They can be divided into three categories. The first are moral and immoral chatbots (i.e., forms of moral machines) and empathic voice assistants. The second are chatbots (some with voice output) for dead, endangered, or extinct languages and idioms. The third are pedagogical chatbots and chatbots that give recommendations and advice. Some of the projects lasted between four and six months. Most of the GPTs were created in just a few hours. Exceptions are Miss Tammy and Animal Whisperer, which took several months to build with the help of prompt engineering and retrieval-augmented generation (RAG). Articles and book chapters have been published on many of the projects. The names of the developers can be found in these. A few chatbots made it into the media, such as GOODBOT (for which the preparatory work began in 2012), LÜGENBOT aka LIEBOT, and @llegra.

kAIxo says “kaixo”

The final presentation of the “kAIxo” project took place on January 9, 2025. Nicolas Lluis Araya was the project team member. The FHNW School of Business has been developing chatbots for dead, endangered, and extinct languages for several years. A well-known example is @llegra, a chatbot for Vallader. In the spring of 2024, Oliver Bendel tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for them. On May 12, 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the “kAIxo” project (the Basque “kaixo” corresponds to the English “hello”), the chatbot kAIxo was built, which speaks Basque. Its purpose is to keep users practicing the written or spoken language or to awaken the desire to learn the endangered language. The chatbot is based on GPT-4o and Gemini 1.5 Flash, and the user can select his or her preferred large language model (LLM). Retrieval-augmented generation (RAG) plays a central role. It draws on the ChatSubs dataset, which contains subtitles of movie dialogs in Basque. Thanks to a text-to-speech engine, the chatbot can also speak. At the final presentation, Nicolas Lluis Araya presented a working prototype that can be accessed via www.kaixo.ch.
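
To make the RAG idea more concrete, here is a minimal Python sketch of the general pattern: retrieve a few matching dialog lines and place them in the prompt that would be sent to an LLM. It is not the kAIxo implementation. The sample lines are illustrative placeholders rather than ChatSubs data, the word-overlap scoring stands in for whatever retrieval method the project actually uses, and the names `retrieve` and `build_prompt` are hypothetical.

```python
import re
from collections import Counter

# Illustrative placeholder lines; NOT taken from the ChatSubs dataset.
SUBTITLE_LINES = [
    "Kaixo, zer moduz?",
    "Egun on, ongi etorri!",
    "Eskerrik asko, agur!",
]


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def retrieve(query, corpus, k=2):
    """Return up to k corpus lines that share at least one word with the query.

    Assumption: simple word overlap replaces a real embedding-based search.
    """
    query_counts = Counter(tokenize(query))

    def score(line):
        # Number of word occurrences shared by query and line.
        return sum((query_counts & Counter(tokenize(line))).values())

    ranked = sorted(corpus, key=score, reverse=True)
    return [line for line in ranked[:k] if score(line) > 0]


def build_prompt(query, corpus, k=2):
    """Assemble a prompt that places the retrieved lines before the user input."""
    context = "\n".join(f"- {line}" for line in retrieve(query, corpus, k))
    return (
        "Answer in Basque. Use these example dialog lines as context:\n"
        f"{context}\n\nUser: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("kaixo", SUBTITLE_LINES))
```

In a real system, the resulting prompt would be passed to the selected LLM; that call is left out here because the project's actual API usage is not described.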

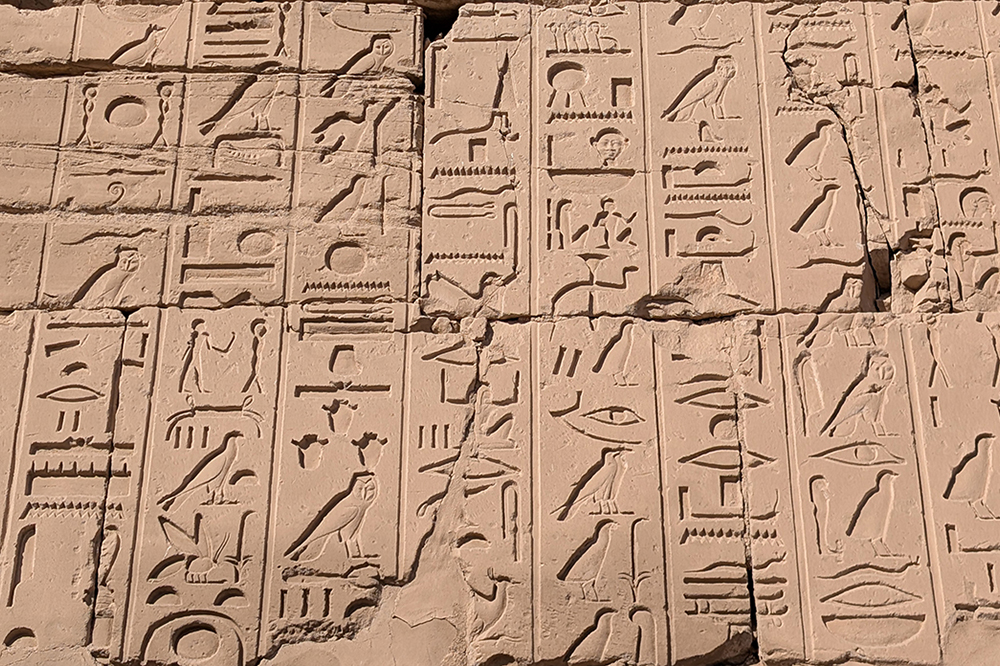

Cleop@tr@ Visits the Karnak Temple

Cleop@tr@ was invented by Prof. Dr. Oliver Bendel in May 2024. It is a GPT that specializes in Egyptian. It is also familiar with the culture and history of ancient Egypt. Since 2012, the technology philosopher and information systems specialist has been building chatbots and voice assistants – partly with his students and partly on his own. These have been discussed by the media and found interesting by NASA. Under his supervision, Karim N’diaye developed the chatbot @ve for Latin, Dalil Jabou the voice-enhanced chatbot @llegra for Vallader, and Nicolas Lluis Araya the voice-enhanced chatbot kAIxo for Basque. For some time now, he has been testing the reach of GPTs for endangered languages such as Irish, Maori, and Basque. He is also investigating the potential for extinct languages such as Egyptian (Cleop@tr@) and Akkadian (H@mmur@pi). The GPTs do not readily communicate in hieroglyphics and cuneiform, but they can certainly represent and explain signs of visual languages. It is even possible to enter entire sentences and then ask how they can be improved or what they mean. In December 2024, Oliver Bendel tested his Cleop@tr@ in the Karnak Temple in Luxor. It was able to provide coherent explanations and translations for several inscriptions on columns and walls. However, further tests also revealed clear errors. Ultimately, Egyptologists will have to assess how reliable it is.

Grandma Daisy Tricks Phone Scammers

“Human-like AIs have brought plenty of justifiable concerns about their ability to replace human workers, but a company is turning the tech against one of humanity’s biggest scourges: phone scammers. The AI imitates the criminals’ most popular target, a senior citizen, who keeps the fraudsters on the phone as long as possible in conversations that go nowhere, à la Grandpa Simpson.” (Techspot, 14 November 2024) In this case, an AI grandmother is sicced on the fraudsters. “The creation of O2, the UK’s largest mobile network operator, Daisy, or dAIsy, is an AI created to trick scammers into thinking they are talking to a real grandmother who likes to ramble. If and when the AI does hand over the demanded bank details, it reads out fake numbers and names.” (Techspot, 14 November 2024) Daisy works by listening to a caller and transcribing his or her voice to text. Responses are generated by a large language model (LLM), complete with a character personality layer, and then fed back through a custom AI text-to-speech model to generate a voice response. All this happens in real time, as the magazine reports. As phone scammers use more and more AI in their calls, AI systems will soon be trying to outsmart each other.
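
The three stages described in the report (speech to text, an LLM with a persona, text to speech) can be pictured as a simple chain. The following Python sketch shows only that structure, under heavy assumptions: it is not O2's system, the class name `VoicePipeline` is hypothetical, and the three stub functions merely stand in for real speech recognition, language model, and speech synthesis components.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains the three stages: transcribe -> generate reply -> synthesize."""

    transcribe: Callable[[bytes], str]    # caller audio -> text
    generate: Callable[[str, str], str]   # (persona, caller text) -> reply text
    synthesize: Callable[[str], bytes]    # reply text -> audio
    persona: str

    def respond(self, caller_audio: bytes) -> bytes:
        caller_text = self.transcribe(caller_audio)
        reply_text = self.generate(self.persona, caller_text)
        return self.synthesize(reply_text)


# Stub stages for illustration only; real components would replace them.
def fake_transcribe(audio: bytes) -> str:
    # Assumption: the "audio" is just UTF-8 text in this toy example.
    return audio.decode("utf-8")


def fake_generate(persona: str, caller_text: str) -> str:
    # A real system would call an LLM with a persona prompt here.
    return f"[{persona}] Oh dear, could you repeat that? You said: {caller_text}"


def fake_synthesize(text: str) -> bytes:
    # A real system would return synthesized speech here.
    return text.encode("utf-8")


pipeline = VoicePipeline(
    transcribe=fake_transcribe,
    generate=fake_generate,
    synthesize=fake_synthesize,
    persona="rambling grandmother",
)

if __name__ == "__main__":
    print(pipeline.respond(b"Please give me your bank details").decode("utf-8"))
```

Passing the stages in as callables keeps the chain independent of any particular vendor or model, which is a design choice of this sketch, not something the report states.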

The Animal Whisperer at ACI 2024

The paper “The Animal Whisperer Project” by Oliver Bendel and Nick Zbinden will be presented at ACI 2024, which takes place in Glasgow this December. It is a conference that brings together the small community of experts in animal-computer interaction and animal-machine interaction. This includes Oliver Bendel, who has been researching in this field since 2012, with a background in animal ethics from the 1980s and 1990s. He initiated the Animal Whisperer project. The developer was Nick Zbinden, who graduated from the FHNW School of Business. From March 2024, three apps were created on the basis of GPT-4: the Cow Whisperer, the Horse Whisperer, and the Dog Whisperer. They can be used to analyze the body language, behavior, and environment of cows, horses, and dogs. The aim is to avert danger to humans and animals. For example, a hiker can receive a recommendation on his or her smartphone not to cross a pasture if a mother cow and her calves are present. All he or she has to do is call up the application and take photos of the surroundings. The three apps have been available as prototypes since August 2024. With the help of prompt engineering and retrieval-augmented generation (RAG), they have been given extensive knowledge and skills. Above all, self-created and labeled photos were used. In the majority of cases, the apps correctly describe the animals’ body language and behavior. Their recommendations for human behavior are also adequate (Image: DALL-E 3).

Start of the kAIxo Project

Chatbots for dead, endangered, and extinct languages are being developed at the FHNW School of Business. One well-known example is @llegra, a chatbot for Vallader. Oliver Bendel recently tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for them. On May 12, 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the kAIxo project (the Basque “kaixo” corresponds to the English “hello”), a chatbot or voice assistant called kAIxo that speaks Basque is to be developed. The purpose is to keep users practicing the written or spoken language or to awaken the desire to learn the endangered language. The chatbot should be based on a large language model (LLM). Both prompt engineering and fine-tuning are conceivable for customization. Retrieval-augmented generation (RAG) can play a central role. The result will be a functioning prototype. Nicolas Lluis Araya, a student of business informatics, has been recruited to implement the project. The kick-off meeting will take place on September 3, 2024.