A workshop in the field of machine ethics will be held at the University of Manchester on 11 November 2024. The following information can be found on the website: “Recent advances in artificial intelligence have led to a range of concerns about the ethical impact of the technology. This includes concerns about the day-to-day behaviour of robotic systems that will interact with humans in workplaces, homes and hospitals. One of the themes of these concerns is the need for such systems to take ethics into account when reasoning. This has generated new interest in how we can specify, implement and validate ethical reasoning.” (Website iFM 2024) The aim of this workshop, to be held in conjunction with iFM 2024, is to explore formal approaches to these issues. The submission deadline is 8 August, and notification is on 12 September. More information is available at ifm2024.cs.manchester.ac.uk/fear.html.

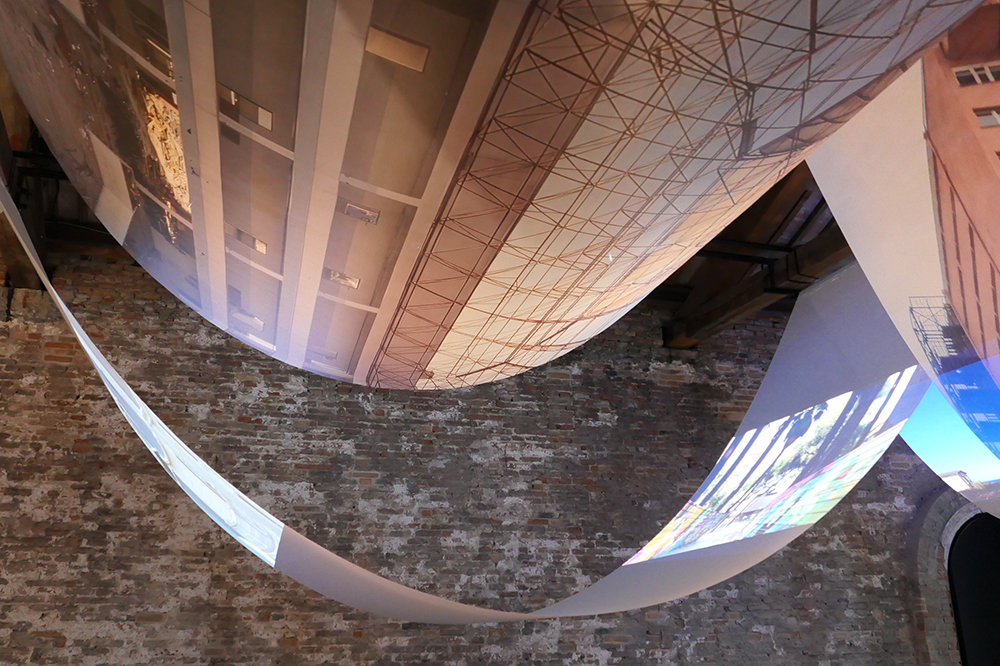

Ghost Stories in Venice

The Turkish Pavilion at the 2023 Venice Architecture Biennale (Biennale Architettura 2023) is still a magnet for many spectators in October. It has hung a series of cloud-like fabrics that reflect the many abandoned buildings in Turkey and explore possible new ways of transformation in the face of the country’s catastrophic earthquakes. The exhibition entitled “Ghost Stories: The Carrier Bag Theory of Architecture” is curated by Sevince Bayrak and Oral Göktaş. It seeks new ways of transformation through the use of new technologies such as artificial intelligence (AI) and machine learning. The World Architecture platform (worldarchitecture.org) writes: “Using AI as a tool, trained in their architectural vocabulary, Bayrak and Göktaş have realised new hopeful visions for each of the uninhabited buildings, showing how people can repopulate and repurpose these spaces for the benefit of the community.” (World Architecture, 9 June 2023) Artificial intelligence is becoming increasingly important for architecture. With DALL-E 3 and other image generators, one can depict houses and design city views, either as photorealistic, colorful views or as white models that reduce complexity. The Venice Architecture Biennale will run until 26 November 2023. Further information is available at www.labiennale.org/en/architecture/2023.

@llegra, a Chatbot for Vallader

Conversational agents have been a research subject of Prof. Dr. Oliver Bendel for a quarter of a century. He dedicated his doctoral thesis at the University of St. Gallen to them. At the School of Business FHNW, he developed them with his changing teams from 2012 to 2022, primarily in the context of machine ethics and social robotics. The philosopher of technology now devotes himself increasingly to dead, extinct, and endangered languages. After @ve (2022), a chatbot for Latin based on GPT-3, another project started in March 2023. The chatbot @llegra is being developed by Dalil Jabou for the Rhaeto-Romanic idiom Vallader, which is spoken in the Lower Engadine between Martina in the northeast and Zernez in the southwest, as well as in Val Müstair. The user can type text and gets text output. In addition, @llegra speaks with the help of a text-to-speech system from the company SlowSoft, which supports the project. The GPT-3 language model produced rather unsatisfactory results. The breakthrough then came with the use of GPT-4. The knowledge base was supplemented with the help of four children’s books on Vallader. The project will be completed in August 2023. The results will be published thereafter.

Robot Hand Can Operate in the Dark

“Researchers at Columbia Engineering have demonstrated a highly dexterous robot hand, one that combines an advanced sense of touch with motor learning algorithms in order to achieve a high level of dexterity.” (Website Columbia Engineering, 28 April 2023) Columbia Engineering reported this on its website on April 28, 2023. The text goes on to say: “As a demonstration of skill, the team chose a difficult manipulation task: executing an arbitrarily large rotation of an unevenly shaped grasped object in hand while always maintaining the object in a stable, secure hold. This is a very difficult task because it requires constant repositioning of a subset of fingers, while the other fingers have to keep the object stable. Not only was the hand able to perform this task, but it also did it without any visual feedback whatsoever, based solely on touch sensing.” (Website Columbia Engineering, 28 April 2023) “While our demonstration was on a proof-of-concept task, meant to illustrate the capabilities of the hand, we believe that this level of dexterity will open up entirely new applications for robotic manipulation in the real world”, said Matei Ciocarlie, according to the website. He is an Associate Professor in the Departments of Mechanical Engineering and Computer Science and developed the hand together with his graduate student Gagan Khandate (Photo: Columbia University ROAM Lab).

Introducing Visual ChatGPT

Researchers at Microsoft are working on a new application based on ChatGPT and solutions like Stable Diffusion. Visual ChatGPT is designed to allow users to generate images using text input and then edit individual elements. In their paper “Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models” Chenfei Wu and his co-authors write: “We build a system called Visual ChatGPT, incorporating different Visual Foundation Models, to enable the user to interact with ChatGPT by 1) sending and receiving not only languages but also images 2) providing complex visual questions or visual editing instructions that require the collaboration of multiple AI models with multi-steps” – and, not to forget: “3) providing feedback and asking for corrected results” (Wu et al. 2023). For example, one can use an appropriate prompt to create an image of a landscape with blue sky, hills, meadows, flowers, and trees. Then, one instructs Visual ChatGPT with another prompt to make the hills higher and the sky more dusky and cloudy. One can also ask the program what color the flowers are and change their color with another prompt. A final prompt makes the trees in the foreground appear greener. The paper can be downloaded from arxiv.org.

Programming Machine Ethics

The book “Programming Machine Ethics” (2016) by Luís Moniz Pereira and Ari Saptawijaya is available for free download from Z-Library. Luís Moniz Pereira is among the best-known machine ethicists. “This book addresses the fundamentals of machine ethics. It discusses abilities required for ethical machine reasoning and the programming features that enable them. It connects ethics, psychological ethical processes, and machine implemented procedures. From a technical point of view, the book uses logic programming and evolutionary game theory to model and link the individual and collective moral realms. It also reports on the results of experiments performed using several model implementations. Opening specific and promising inroads into the terra incognita of machine ethics, the authors define here new tools and describe a variety of program-tested moral applications and implemented systems. In addition, they provide alternative readings paths, allowing readers to best focus on their specific interests and to explore the concepts at different levels of detail.” (Information by Springer) The download link is eu1lib.vip/book/2677910/9fd009.

Hold a Video Call Naked Without Trouble

A software developer has created a tool that adds pants to the lower body in video calls to protect users from embarrassing situations. He presents his solution on his YouTube channel Everything Is Hacked, under the title “I made a Zoom filter to add pants when you forget to wear them”. Under the video the software developer writes: “Using Python, OpenCV, MediaPipe, and pyvirtualcam to create a Zoom (or Teams or Hangouts or whatever) video filter to blur out your lower half or add customizable pants. This should work on any platform + video call app, as well as on recordings.” (Everything Is Hacked, May 10, 2022) You can watch the video here. The code is available at https://github.com/everythingishacked/Pants …
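To give a rough idea of what “blurring out the lower half” of a frame can mean, here is a minimal sketch in plain Python. It is not the developer's code: it uses pixelation as a simple stand-in for a blur, it works on a grayscale frame stored as a list of rows, and the function name `pixelate_below` and the parameter `hip_row` are hypothetical. In a real filter, the hip position would presumably come from a pose estimation step, and the frames from a webcam.

```python
from typing import List

Frame = List[List[int]]  # grayscale image: rows of pixel intensities 0..255


def pixelate_below(frame: Frame, hip_row: int, block: int = 4) -> Frame:
    """Return a copy of `frame` with all rows from `hip_row` down pixelated.

    `hip_row` is a hypothetical input (assumed to be supplied by some
    pose estimation step). Each block x block tile below that row is
    replaced by its mean intensity; rows above it stay untouched.
    """
    height = len(frame)
    width = len(frame[0]) if height else 0
    out = [row[:] for row in frame]  # copy, so the input is not modified
    for top in range(max(hip_row, 0), height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [frame[r][c] for r in rows for c in cols]
            mean = sum(tile) // len(tile)  # integer mean of the tile
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out
```

Applied to every frame of a video stream, such a function hides detail below the hip line while leaving the upper body unchanged. A larger `block` value hides more.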

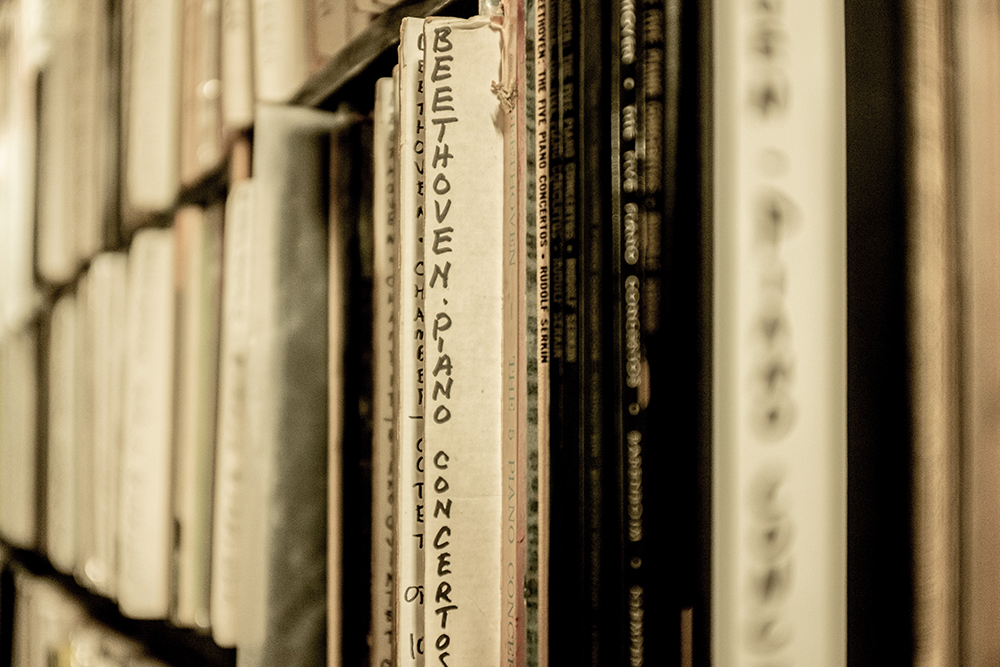

Beethoven’s Finished

Beethoven’s previously unfinished 10th Symphony – in short Beethoven’s Unfinished – has been completed by AI technology. “The work will have its world premiere in Germany next month, 194 years after the composer’s death.” (Classic fm, 28 September 2021) This is what Sophia Alexandra Hall writes on the Classic fm website on 28 September 2021. “The project was started in 2019 by a group made up of music historians, musicologists, composers and computer scientists. Using artificial intelligence meant they were faced with the challenge of ensuring the work remained faithful to Beethoven’s process and vision.” (Classic fm, 28 September 2021) Dr Ahmed Elgammal, professor at the Department of Computer Science, Rutgers University, said that his team “had to use notes and completed compositions from Beethoven’s entire body of work – along with the available sketches from the Tenth Symphony – to create something that Beethoven himself might have written” (Classic fm, 28 September 2021). You can listen to samples here. Whether the German composer would have liked the result, we will unfortunately never know.

Talking with Animals

We use our natural language, facial expressions and gestures when communicating with our fellow humans. Some of our social robots also have these abilities, and so we can converse with them in the usual way. Many highly evolved animals have a language in which there are sounds and signals that have specific meanings. Some of them – like chimpanzees or gorillas – have facial and gestural abilities comparable to ours. Britt Selvitelle and Aza Raskin, founders of the Earth Species Project, want to use machine learning to enable communication between humans and animals. Languages, they believe, can not only be represented as geometric structures but also be translated by matching these structures to each other. They say they have started working on whale and dolphin communication. Over time, the focus will broaden to include primates, corvids, and others. It would be important for the two scientists to study not only natural language but also facial expressions, gestures and other movements associated with meaning (they are well aware of this challenge). In addition, there are aspects of animal communication that are inaudible and invisible to humans that would need to be considered. Britt Selvitelle and Aza Raskin believe that translation would open up the world of animals – but it could be the other way around: they might first have to open up the world of animals in order to decode their language. However, should there be breakthroughs in this area, it would be an opportunity for animal welfare. For example, social robots, autonomous cars, wind turbines, and other machines could use animal languages alongside mechanical signals and human commands to instruct, warn and scare away dogs, elks, pigs, and birds. Machine ethics has been developing animal-friendly machines for years. Among other things, the scientists use sensors together with decision trees. Depending on the situation, braking and evasive maneuvers are initiated. Maybe one day the autonomous car will be able to avoid an accident by calling out in deer dialect: Hello deer, go back to the forest!
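How sensors and a decision tree can work together in an animal-friendly machine may be easier to see in a small sketch. The following Python example is only an illustration under stated assumptions: the sensor fields, the branching criteria and the three actions are hypothetical and are not taken from any of the prototypes mentioned above.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    BRAKE = "brake"
    EVADE = "evade"


@dataclass
class Observation:
    """Hypothetical sensor readings of an autonomous car."""
    animal_detected: bool
    distance_m: float           # distance to the animal
    stopping_distance_m: float  # distance needed to stop at current speed
    adjacent_lane_free: bool


def decide(obs: Observation) -> Action:
    """Walk a small hand-written decision tree from the root to a leaf."""
    if not obs.animal_detected:
        return Action.CONTINUE
    if obs.distance_m > obs.stopping_distance_m:
        return Action.BRAKE   # enough room to stop in front of the animal
    if obs.adjacent_lane_free:
        return Action.EVADE   # too close to stop, but swerving is possible
    return Action.BRAKE       # no safe way around: at least reduce speed


print(decide(Observation(True, 12.0, 30.0, True)).value)
```

Each inner node of the tree asks one question about the sensor data, and each leaf names a maneuver. This keeps the behavior of the machine transparent and easy to review.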

Robots that Spare Animals

Semi-autonomous machines, autonomous machines and robots inhabit closed, semi-closed and open environments, more structured environments like the household or more unstructured environments like cultural landscapes or the wilderness. There they encounter domestic animals, farm animals, working animals, and wild animals. These creatures could be disturbed, displaced, injured, or killed by the machines. Within the context of machine ethics and social robotics, the School of Business FHNW developed several design studies and prototypes for animal-friendly machines, which can be understood as moral and social machines in the spirit of these disciplines. In 2019-20, a team led by Prof. Dr. Oliver Bendel developed a prototype robot lawnmower that can recognize hedgehogs, interrupt its work for them and thus protect them. Every year many of these animals die worldwide because of traditional service robots. HAPPY HEDGEHOG (HHH), as the invention is called, could be a solution to this problem. This article begins by providing an introduction to the background. Then it focuses on the machine’s navigation (where it comes across certain objects that need to be recognized) and on its thermal and image recognition (with the help of machine learning). It also presents obvious weaknesses and possible improvements. The results could be relevant for an industry that wants to market its products as animal-friendly machines. The paper “The HAPPY HEDGEHOG Project” is available here.