From November 2025 to February 2026, Sahan Hatemo of the FHNW School of Computer Science, Dr. Katharina Kühne of the University of Potsdam, and Prof. Dr. Oliver Bendel of the FHNW School of Business are conducting a research project. As part of it, they are launching a sub-study that consists of a short computer-based task and a brief questionnaire. Participants listen to a series of laughter samples and rate whether each one sounds authentic. The task comprises 50 samples and typically takes about ten minutes to complete. Participation is possible via PC, laptop, or smartphone. Before starting, participants should make sure that their device's sound is turned on and that they are in a quiet, distraction-free environment. The computer-based task and the brief questionnaire can be accessed at research.sc/participant/login/dynamic/3BE7321C-B5FD-4C4B-AF29-9A435EC39944.

Start of the ECHO Project

On October 24, 2025, the kick-off meeting for the ECHO project took place at the FHNW School of Business. Two weeks later, on November 7, the proposal was approved. The project collaborator is BIT student Lucas Chingis Marty, who is writing his thesis on this topic. The initiator is Prof. Dr. Oliver Bendel. ECHO is an MLLM-based chatbot that introduces children, young people, and laypeople to the world of music. It can listen to, describe, and evaluate pieces and songs. To do this, it is equipped with a powerful audio analysis module whose analysis covers key, melody, and harmony, among other things. ECHO makes music accessible and understandable without requiring any prior knowledge. The aim is to promote curiosity, listening comprehension, and artistic taste. The prototype is expected to be available in February 2026.

GPT-AfterDark is Coming

According to several media reports on October 15, 2025, ChatGPT is set to get an erotic function. This is likely to include features such as dirty talk – via text and voice – but possibly also instructions for all kinds of positions and tips and tricks for sex toys and sex robots. This follows in the footsteps of other chatbots such as Replika. However, these often have an avatar to make them irresistible. This is not the case with ChatGPT, apart from the small round tiles of the GPTs, the "custom versions" that anyone can easily create. Among these, incidentally, is a SexGPT by Dominick Pandolfo ("Provides sexual health information"), so quite harmless. Artificial Life's virtual girlfriend already existed at the turn of the millennium, also in linguistic and visual form. If OpenAI does not add an avatar, users will build one themselves, as some are already doing today, albeit not necessarily for sexual purposes. Meshy AI and similar tools can be used to generate and animate three-dimensional avatars. It will be interesting to see whether the German version of ChatGPT uses gender-inclusive language in its erotic function, as it does by default. Some people may find this arousing, others may not. When asked what this version of ChatGPT could be called, the chatbot itself suggested ChatGPT Red, GPT-AfterDark, or DeepLure. If that doesn't turn you on, there's no helping you.

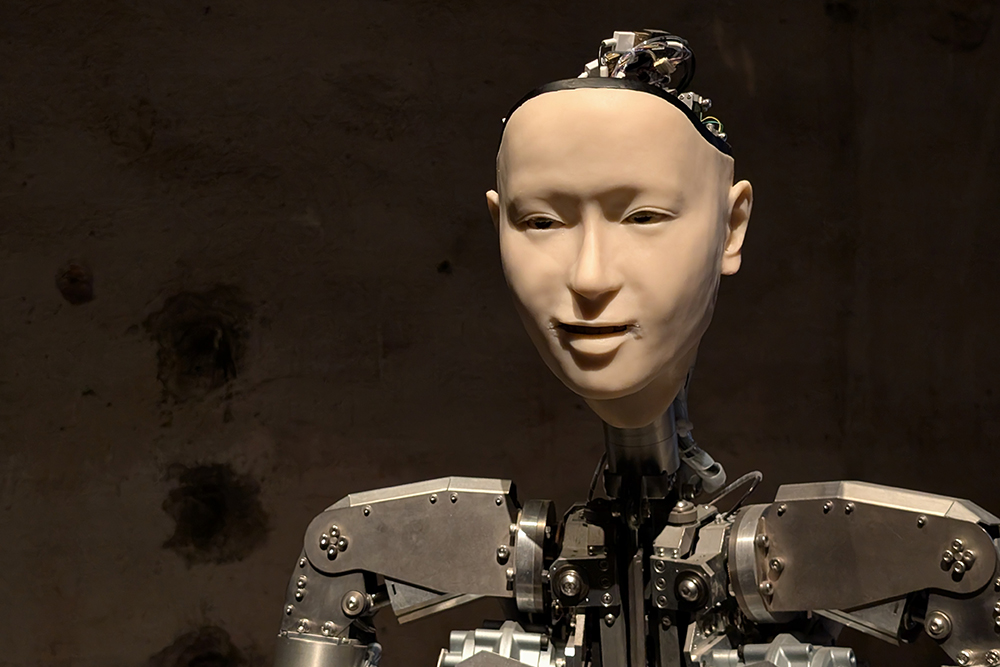

Alter3 in Venice

The installation entitled "Am I a Strange Loop?" will be on display at the 2025 Architecture Biennale in Venice. It raises the question of whether artificial intelligence can develop a form of self-awareness. The installation features the humanoid robot Alter3, which is capable of facial expressions, gestures, and speech. It is powered by GPT-4 or GPT-5. Visitors can talk to it in different languages via a microphone. The installation draws on ideas from the physicist, computer scientist, and cognitive scientist Douglas Hofstadter, who argued that consciousness arises when a system reflects on itself. Alter3 is an impressive robot with a silicone face and silicone hands, but otherwise has a machine-like appearance. However, neither GPT-4, GPT-5, nor any other language model can create world consciousness or self-awareness.

Initial Thoughts on Wearable Social Robots

Wearable social robots are very small yet extremely powerful systems that can be worn around the neck, on the body, or in a shoulder bag or handbag. They are not only companions to humans but also become part of them by expanding their senses and means of expression. The article entitled "This robot suits you well!" (subtitle: "On the phenomenon of wearable social robots") by Oliver Bendel defines the term "wearable social robots", presents areas of application, and discusses social and ethical challenges. It also offers recommendations for developers and users. It becomes clear that wearable social robots are novel tools, extensions, and enhancements of humans, with capabilities that go beyond those of smartphone apps. The article was published on September 25, 2025, in Wiley Industry News, in both German and English. It can be accessed at www.wileyindustrynews.com/de/fachbeitraege/dieser-roboter-steht-ihnen-aber-gut or www.wileyindustrynews.com/en/contributions/that-robot-suits-you-well.

ICSR + Art 2026 in London

Oliver Bendel on Wearable Social Robots

At the last session of the ICSR on September 12, 2025, Oliver Bendel presented his full paper titled "Wearable Social Robots for the Disabled and Impaired". He began by defining the term "wearable social robots", which he sees as a special form and combination of wearable robots and social robots. One example is AIBI, a small robot that he briefly wore around his neck during the talk. Wearable social robots can include functions for games and entertainment, information and learning, navigation and description, and combating loneliness and anxiety. Potential user groups include pupils and students, prison inmates, astronauts, and disabled and impaired persons. In videos, Franziska and Julia demonstrated how they use AIBI as a companion and for social support. With this paper, Oliver Bendel continued his work in the field of inclusive AI and inclusive robotics. The ICSR is one of the world's leading conferences for social robotics, and its 17th edition took place from September 10 to 12, 2025, in Naples, Italy. Mariacarla Staffa (University of Naples Parthenope, Italy), John-John Cabibihan (Qatar University, Qatar), and Bruno Siciliano (University of Naples Federico II, Italy) served as the main organizers. About 300 participants attended over the three days, once again contributing to the advancement of social robotics.

Small Talk with a Robot

On the last day of the ICSR, September 12, 2025, Katharina Kühne presented her full paper titled "Small Talk with a Robot Reduces Stress and Improves Mood" (written together with Antonia L. Z. Klöffel, Oliver Bendel, and Martin H. Fischer). Previous research has shown that social support reduces stress and improves mood. This study tested whether small talk with a social robot could have a similar effect. After performing a stressful task, 98 participants either chatted with a NAO robot, listened to the robot tell a neutral story, or did not interact with the robot at all. Both robot interactions reduced stress, small talk in particular, which also boosted positive mood. The effects were stronger in participants with high acute stress. Positive affect played a key role in stress reduction, suggesting that robot-mediated small talk may be a useful tool for providing emotional support. Dr. Katharina Kühne and Prof. Dr. Martin H. Fischer are researchers at the University of Potsdam. Antonia L. Z. Klöffel assists Katharina Kühne as a junior scientist. Martin H. Fischer heads the Potsdam Embodied Cognition Group (PECoG). Prof. Dr. Oliver Bendel is an associated researcher with PECoG.

Anouk Wipprecht at ICSR

On September 12, 2025, Dutch FashionTech designer Anouk Wipprecht gave a keynote speech entitled “The Intersection of Fashion, Robotics and Technology” at ICSR 2025 in Naples. In her presentation, she demonstrated how fashion can become an interactive experience that goes far beyond pure aesthetics by incorporating state-of-the-art technologies and scientific insights. With her designs, Wipprecht explores how clothing can transform not only our perception but also our relationship with the environment. A striking example of this is her Spider Dress, which uses integrated sensors and movable limbs to create a kind of technologically enhanced personal space. She collaborates with renowned partners such as Intel, Google, Microsoft, Samsung, Audi, and Swarovski, employing techniques like machine learning, biomimetic design, and microcontroller-driven animatronics. Her creations breathe, move, and respond to external stimuli – always with the aim of exploring new interfaces between humans and technology, and encouraging her generation to reflect on the future of the human body in digital space.

The Mirokaï

The third and final day of ICSR 2025, September 12, 2025, began with a keynote speech by Jérôme Monceaux entitled "Emotivation by Design: The Mirokaï". One of the Mirokaï, spectacular robots that roll on a sphere, was present on stage: the female Miroka. Jérôme Monceaux's keynote traced the evolution of robotics toward more intuitive, inspiring, and joyful interactions. From the iconic NAO and Pepper robots at Aldebaran to the latest generation of social robots, the Mirokaï by Enchanted Tools, his innovative approach has reshaped design, mobility, and user experience. He shared his vision of humanoid robots built to support people in everyday life and work. By combining bold engineering with endearing character design, embodied AI, and meaningful storytelling, Jérôme Monceaux and his team have created a cohesive hardware and software ecosystem that integrates naturally into social environments, especially in care settings such as senior living facilities and pediatric hospitals. About 300 participants watched enthusiastically as the inventor and visionary spoke to Miroka on stage and handed it a bubble flower, which it accepted and used.