The Emmy Noether Research Group “The Phenomenon of Interaction in Human-Machine Interaction” and the Institute of Ethics, History, and Theory of Medicine (LMU Munich) host a lecture series “on some of the pressing issues arising in the context of implementing and using AI in medicine”. “Each date will consist of three short talks by renowned experts in the respective fields followed by a roundtable discussion. All lectures are held online (Zoom) until further notice.” (Website The Philosophy of Human-Machine Interaction) On 19 November 2020 (18.00-19.30) the topic will be “AI in Medical Robotics”. Speakers will be Prof. Dr. Oliver Bendel (University of Applied Sciences and Arts Northwestern Switzerland), Prof. Dr. Manfred Hild (Beuth University of Applied Sciences Berlin) and Dr. Janina Loh (University of Vienna). The presentation language is German. More information via interactionphilosophy.wordpress.com.

AI in the Art of Film

“Agence” by Transitional Forms (Toronto) is the first example of a film that uses reinforcement learning to control its animated characters, as MIT Technology Review explains in an article published on 2 October 2020. “Agence was debuted at the Venice International Film Festival last month and was released this week to watch/play via Steam, an online video-game platform. The basic plot revolves around a group of creatures and their appetite for a mysterious plant that appears on their planet. Can they control their desire, or will they destabilize the planet and get tipped to their doom? Survivors ascend to another world.” (MIT Technology Review, 2 October 2020) The film could be another example of how art and artificial intelligence belong together. Its director takes the same view: “I am super passionate about artificial intelligence because I believe that AI and movies belong together …” (MIT Technology Review, 2 October 2020). Whether audiences share this enthusiasm, in this case and in other areas, remains to be seen.

A Spider that Reads the Whole Web

Diffbot, a Stanford startup, is building an AI-based spider that reads as many pages as possible on the entire public web and extracts as many facts from those pages as it can. “Like GPT-3, Diffbot’s system learns by vacuuming up vast amounts of human-written text found online. But instead of using that data to train a language model, Diffbot turns what it reads into a series of three-part factoids that relate one thing to another: subject, verb, object.” (MIT Technology Review, 4 September 2020) Knowledge graphs – which is what this is all about – have been around for a long time. However, they have mostly been created manually or only for certain domains. Some years ago, Google started using knowledge graphs too. Instead of giving us a list of links to pages about Spider-Man, the service gives us a set of facts about him drawn from its knowledge graph. But it only does this for its most popular search terms. According to MIT Technology Review, the startup wants to do it for everything. “By fully automating the construction process, Diffbot has been able to build what may be the largest knowledge graph ever.” (MIT Technology Review, 4 September 2020) Diffbot’s AI-based spider reads the web as we read it and sees the same facts that we see. Even if it does not really understand what it sees, we may well be amazed at the results.
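The subject-verb-object idea can be illustrated with a minimal Python sketch of a triple store. It is not Diffbot's implementation or API: the class and method names (`TripleStore`, `facts_about`, `subjects_with`) are hypothetical, the facts are entered by hand rather than extracted from web pages, and a real system would also need entity resolution and far more scalable storage.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    """One factoid relating two things: subject, verb, object."""
    subject: str
    verb: str
    obj: str


class TripleStore:
    """Toy in-memory knowledge graph, indexed by subject and by (verb, object)."""

    def __init__(self):
        self._by_subject = defaultdict(list)
        self._by_verb_obj = defaultdict(list)

    def add(self, subject, verb, obj):
        triple = Triple(subject, verb, obj)
        self._by_subject[subject].append(triple)
        self._by_verb_obj[(verb, obj)].append(subject)

    def facts_about(self, subject):
        """All triples about one subject, similar to a fact panel in search results."""
        # .get() on a defaultdict does not create empty entries for unknown keys.
        return list(self._by_subject.get(subject, []))

    def subjects_with(self, verb, obj):
        """Reverse lookup: which subjects are linked to `obj` by `verb`?"""
        return list(self._by_verb_obj.get((verb, obj), []))


kg = TripleStore()
kg.add("Spider-Man", "created_by", "Stan Lee")
kg.add("Spider-Man", "created_by", "Steve Ditko")
kg.add("Spider-Man", "first_appeared_in", "Amazing Fantasy #15")

for fact in kg.facts_about("Spider-Man"):
    print(fact.subject, fact.verb, fact.obj)
```

Keeping a second index keyed by (verb, object) makes reverse questions such as "what did Stan Lee create?" as cheap as forward ones, at the cost of storing each fact twice.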

Machine Dance

Which moves go with which song? Should I do the Floss, the Dougie or the Robot? Or should I create a new style? But which one? An AI system could help answer these questions in the future. At least, an announcement from Facebook raises this hope: “Facebook AI researchers have developed a system that enables a machine to generate a dance for any input music. It’s not just imitating human dance movements; it’s creating completely original, highly creative routines. That’s because it uses finely tuned search procedures to stay synchronized and surprising, the two main criteria of a creative dance. Human evaluators say that the AI’s dances are more creative and inspiring than meaningful baselines.” (Website FB) The AI system could inspire dancers when they get stuck and help them keep improving. More information via about.fb.com/news/2020/08/ai-dancing-facebook-research/.
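The quoted description names two criteria, synchronization and surprise, and a search procedure that balances them. As a toy illustration only, the Python sketch below runs a small beam search over a hypothetical set of moves: a move scores for synchronization when its "energy" matches whether the current position is a beat, and for surprise when it does not repeat the previous move. This is not Facebook's system; the move names, energy values, beat pattern and weights are all invented for the example, and "not repeating" is only a crude stand-in for surprise.

```python
# Toy beam search over dance moves. NOT Facebook's method:
# moves, energies, beats and weights below are hypothetical.

MOVES = ("step", "pause", "clap", "spin")
ENERGY = {"pause": 0, "step": 1, "clap": 2, "spin": 3}
W_SYNC = 1.0      # reward for matching move energy to the beat
W_SURPRISE = 0.5  # reward for not repeating the previous move


def step_score(seq, move, on_beat):
    """Score appending `move` to the partial dance `seq`."""
    high_energy = ENERGY[move] >= 2
    sync = 1.0 if high_energy == on_beat else 0.0
    surprise = 0.0 if seq and seq[-1] == move else 1.0
    return W_SYNC * sync + W_SURPRISE * surprise


def generate_dance(beats, width=3):
    """Beam search: keep only the `width` best partial dances after each position."""
    beams = [(0.0, [])]
    for on_beat in beats:
        candidates = [
            (score + step_score(seq, move, on_beat), seq + [move])
            for score, seq in beams
            for move in MOVES
        ]
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:width]
    return beams[0]


beats = [True, False, True, False, True, True, False, False]
score, dance = generate_dance(beats)
print(score, dance)
```

Beam search is used here instead of exhaustive enumeration because the number of possible dances grows exponentially with the length of the song, while the beam keeps the work linear in the number of positions.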

Launch of the Interactive AI Magazine

AAAI has announced the launch of the Interactive AI Magazine. According to the organization, the new platform provides online access to articles and columns from AI Magazine, as well as news and articles from AI Topics and other materials from AAAI. “Interactive AI Magazine is a work in progress. We plan to add lot more content on the ecosystem of AI beyond the technical progress represented by the AAAI conference, such as AI applications, AI industry, education in AI, AI ethics, and AI and society, as well as conference calendars and reports, honors and awards, classifieds, obituaries, etc. We also plan to add multimedia such as blogs and podcasts, and make the website more interactive, for example, by enabling commentary on posted articles. We hope that over time Interactive AI Magazine will become both an important source of information on AI and an online forum for conversations among the AI community.” (AAAI Press Release) More information via interactiveaimag.org.

Talking to Harmony

At the end of June 2020, DIE WELT interviewed Prof. Dr. Oliver Bendel about sex robots and love dolls, focusing on their natural language skills. Owners and users who want to have a relationship are particularly interested in conversations of all kinds, from everything under the sun to “dirty talk”. Companies like Realbotix go very far in this respect. Harmony, for example, can talk to her partners for hours in a quite convincing way. The engineers are experimenting with GPT-2, but also with other language models. Kino Coursey, head of AI at Realbotix, deals with this topic in his article “Speaking with Harmony” for the book “Maschinenliebe” (“Machine Love”), which will be released in October. The interview with Oliver Bendel was published on 11 July 2020 in the print edition of DIE WELT under the title “Intelligente Sexroboter sind begehrte Gesprächspartner” (it had already appeared the day before in the online edition under the title “Was Sexpuppen können” (“What Sex Dolls Can Do”) …). In addition, an English version, “Intelligent sex robots are sought-after dialogue partners”, is available.

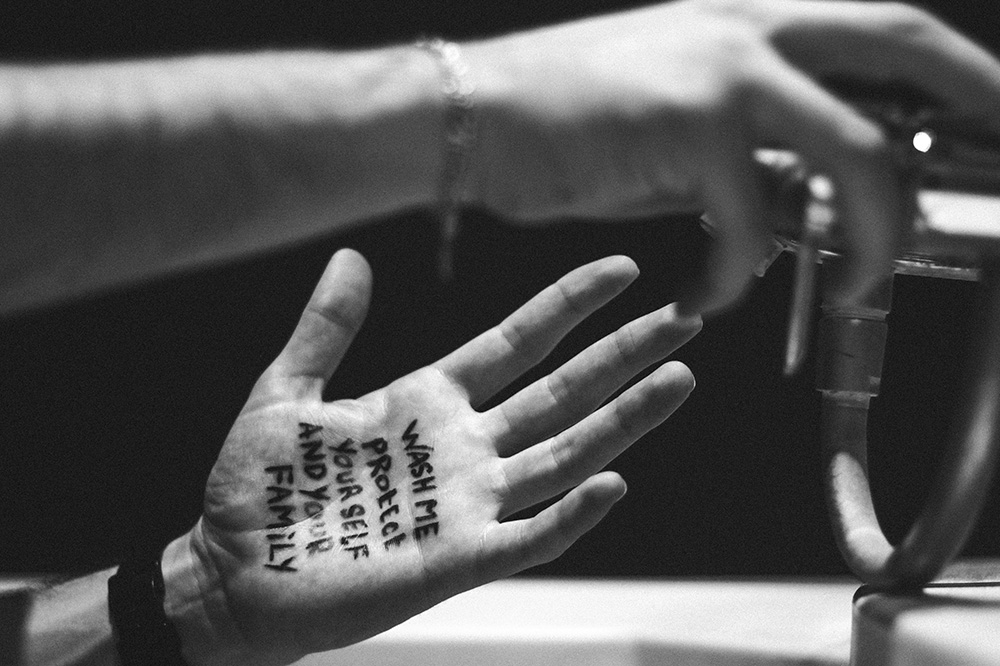

Show Me Your Hands

Fujitsu has developed an artificial intelligence system that could ensure healthcare, hotel and food industry workers scrub their hands properly. This could support the fight against the COVID-19 pandemic. “The AI, which can recognize complex hand movements and can even detect when people aren’t using soap, was under development before the coronavirus outbreak for Japanese companies implementing stricter hygiene regulations … It is based on crime surveillance technology that can detect suspicious body movements.” (Reuters, 19 June 2020) Genta Suzuki, a senior researcher at the Japanese information technology company, told the news agency that the AI can’t identify people from their hands, but that it could be coupled with identity recognition technology so that companies could keep track of employees’ washing habits. Maybe in the future it won’t be our parents who show us how to wash properly, but robots and AI systems. Or perhaps they will skip this detour and clean us directly.
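The Reuters report does not describe how Fujitsu's system decides that a wash was done properly. Assuming a recognizer that emits one hand-movement label per video frame plus a yes/no soap signal (both hypothetical), the final compliance check could be as simple as the Python sketch below. The step labels and the minimum number of frames are invented for illustration and are not Fujitsu's.

```python
from itertools import groupby

# Hypothetical movement labels and threshold; not taken from Fujitsu's system.
REQUIRED_STEPS = ("palms", "backs", "between_fingers", "thumbs", "fingertips", "wrists")
MIN_FRAMES = 3  # a movement must last this many consecutive frames to count


def check_handwash(frame_labels, soap_detected):
    """Return (ok, missing_steps) for one washing session.

    frame_labels: per-frame movement labels from an assumed upstream recognizer.
    soap_detected: assumed output of a separate soap detector.
    """
    performed = set()
    # groupby collapses consecutive identical labels into runs.
    for label, run in groupby(frame_labels):
        if sum(1 for _ in run) >= MIN_FRAMES:
            performed.add(label)
    missing = [step for step in REQUIRED_STEPS if step not in performed]
    ok = bool(soap_detected) and not missing
    return ok, missing


# Every step held for 3 frames, with soap: passes.
good = [s for s in REQUIRED_STEPS for _ in range(3)]
print(check_handwash(good, soap_detected=True))

# Thumbs only briefly: flagged as missing.
rushed = [s for s in REQUIRED_STEPS for _ in range(2 if s == "thumbs" else 3)]
print(check_handwash(rushed, soap_detected=True))
```

Requiring a minimum run length filters out single-frame misclassifications, which any per-frame movement recognizer is likely to produce now and then.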

IBM Will Stop Developing or Selling Facial Recognition Technology

IBM will stop developing or selling facial recognition software over concerns that the technology is used to support racism. This was reported by MIT Technology Review on 9 June 2020. In a letter to Congress, IBM’s CEO Arvind Krishna wrote: “IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency. We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.” (Letter to Congress, 8 June 2020) The extraordinary letter “also called for new federal rules to crack down on police misconduct, and more training and education for in-demand skills to improve economic opportunities for people of color” (MIT Technology Review, 9 June 2020). A talk at Stanford University in 2018 warned against the return of physiognomy in connection with face recognition. The paper is available here.

A Robot Enforces Social Distancing

According to Gizmodo, a robot from Boston Dynamics has been deployed to a park in Singapore to remind people to follow social distancing guidelines during the pandemic. Spot is not designed as a security robot like Knightscope’s K5 or K3, but it has other qualities: it walks on four legs and is very fast. The machine, which was set loose on 8 May 2020 in Bishan-Ang Mo Kio Park, “broadcasts a message reminding visitors they need to stay away from other humans, as covid-19 poses a very serious threat to our health”. It “was made available for purchase by businesses and governments last year and has specially designed cameras to make sure it doesn’t run into things.” (Gizmodo, 8 May 2020) According to a press release from Singapore’s GovTech agency, the cameras will not be able to track or recognize specific individuals, “and no personal data will be collected” (Gizmodo, 8 May 2020). COVID-19 demonstrates that digitization and technologization can be helpful in crises and disasters. Service robots such as security robots, transport robots, care robots and disinfection robots are in increasing demand.

AI as a Secret Weapon Against COVID-19?

Artificial intelligence is underestimated in some respects but overestimated in many others. It is currently seen as a secret weapon against COVID-19, but it most probably is not one. The statement of Alex Engler, a David M. Rubenstein Fellow, is clear: “Although corporate press releases and some media coverage sing its praises, AI will play only a marginal role in our fight against Covid-19. While there are undoubtedly ways in which it will be helpful – and even more so in future pandemics – at the current moment, technologies like data reporting, telemedicine, and conventional diagnostic tools are far more impactful.” (Wired, 26 April 2020) Above all, however, it is social distancing that interrupts the transmission paths and thus curbs the spread of the virus. And it is drugs that will solve the problem, this year or next. What is needed, then, is behavioural adjustment and medical research; artificial intelligence is not really needed. Alex Engler has identified heuristics for a healthy skepticism toward AI claims about COVID-19 and explains them in Wired magazine.