As part of the AAAI Spring Symposia, the symposium “Will AI Light Up Human Creativity or Replace It?: Toward Well-Being AI for co-evolving human and machine intelligence” focuses on how advances in generative AI, large language models, and multi-agent systems are transforming human creativity and decision-making. It addresses the central question of whether AI will amplify human potential or increasingly take its place. The symposium advances the idea of Well-Being AI, emphasizing human-AI collaboration and co-evolution rather than AI as an isolated or autonomous system. While highlighting the potential of AI to support creativity, discovery, and personal development, it also examines risks such as overreliance, reduced diversity of thought, and loss of human autonomy. Chaired by Takashi Kido (see photo) of Teikyo University and Keiki Takadama of The University of Tokyo, the symposium brings together researchers and practitioners from technical, philosophical, and social disciplines to discuss principles and frameworks for AI that augments rather than replaces human creativity. Further information on this symposium and the broader AAAI Spring Symposia organized by the Association for the Advancement of Artificial Intelligence can be found at https://aaai.org/conference/spring-symposia/sss26/ and webpark2506.sakura.ne.jp/aaai/sss26-will-ai-light-up-human-creativity-or-replace-it/.

Towards Inclusive AI and Inclusive Robotics

The article “Wearable Social Robots for the Disabled and Impaired” by Oliver Bendel was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part III.” From the abstract: “Wearable social robots can be found on a chain around the neck, on clothing, or in a shirt or jacket pocket. Due to their constant availability and responsiveness, they can support the disabled and impaired in a variety of ways and improve their lives. This article first identifies and summarizes robotic and artificial intelligence functions of wearable social robots. It then derives and categorizes areas of application. Following this, the opportunities and risks, such as those relating to privacy and intimacy, are highlighted. Overall, it emerges that wearable social robots can be useful for this group, for example, by providing care and information anywhere and at any time. However, significant improvements are still needed to overcome existing shortcomings.” The technology philosopher presented the paper on September 12, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2398-6_8.

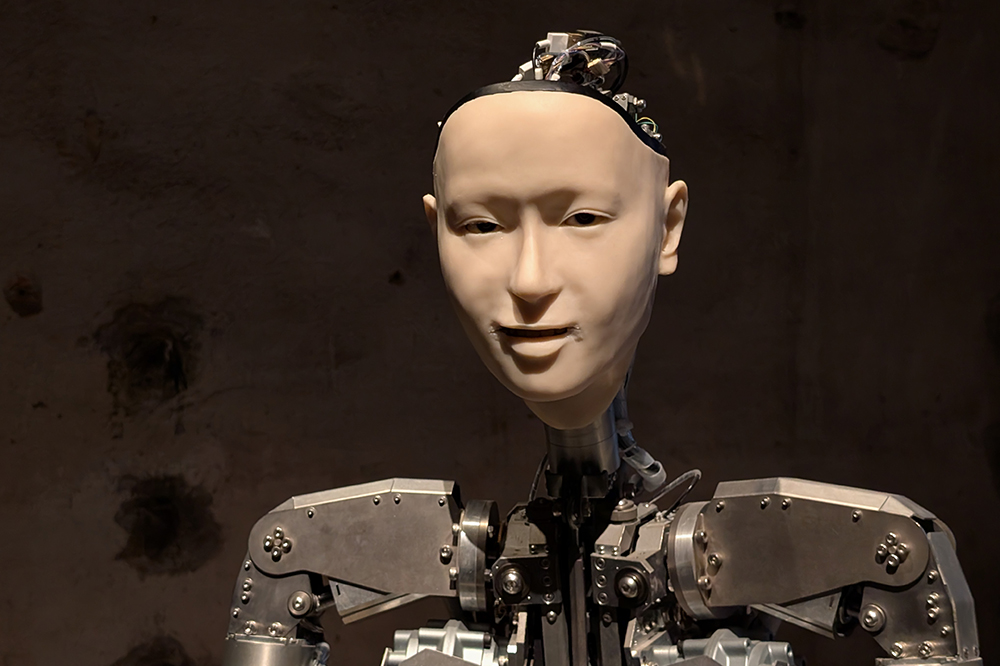

Human-likeness in AI

The Research Topic “Exploring human-likeness in AI: From perception to ethics and interaction dynamics”, hosted by Frontiers in Cognition, invites contributions on how human-like features in robots and AI systems influence user perception, trust, interaction, and ethical considerations. The deadline for submission has been extended. As AI becomes more integrated into society, anthropomorphic design raises pressing questions: Do human-like traits improve communication and acceptance, or do they lead to unrealistic expectations? What ethical implications arise when machines simulate empathy or emotion? This interdisciplinary call welcomes contributions from fields such as psychology, engineering, philosophy, and education. Submissions may include empirical research, theoretical analysis, reviews, or case studies that explore how human-likeness shapes the way we engage with AI. The deadline for manuscript summaries is February 12, 2026; full manuscripts are due by March 12, 2026. Articles will undergo peer review and are subject to publication fees upon acceptance. Topic editors are Dr. Katharina Kühne (University of Potsdam, Germany) and Prof. Dr. Roger K. Moore (The University of Sheffield, United Kingdom). For full details and submission guidelines, visit: www.frontiersin.org/research-topics/72370/exploring-human-likeness-in-ai-from-perception-to-ethics-and-interaction-dynamics.

AAAI 2026 Spring Symposium Series

On September 4, 2025, the Association for the Advancement of Artificial Intelligence (AAAI) announced the continuation of the AAAI Spring Symposium Series. The series will be held from April 7–9, 2026, at the Hyatt Regency San Francisco Airport in Burlingame, California. The call for proposals is available on the AAAI website. According to the organizers, proposals are due October 24, 2025, and early submissions are encouraged. “The Spring Symposium Series is an annual set of meetings run in parallel at a common site. It is designed to bring colleagues together in an intimate forum while at the same time providing a significant gathering point for the AI community. The two and one-half day format of the series allows participants to devote considerably more time to feedback and discussion than typical one-day workshops. It is an ideal venue for bringing together new communities in emerging fields.” (AAAI website). As in 2025, the Spring Symposium Series will not be held on the Stanford University campus, where the History Corner served as the traditional venue for many years. Efforts to secure an alternative university location in the Bay Area have been unsuccessful. AAAI should seriously consider returning to Stanford in 2027. Only then can the Spring Symposium Series regain the atmosphere and significance it once enjoyed.

How Human-Like Should It Be?

The Research Topic “Exploring human-likeness in AI: From perception to ethics and interaction dynamics”, hosted by Frontiers in Cognition, invites submissions on how human-like features in robots and AI systems influence user perception, trust, interaction, and ethical considerations. As AI becomes more integrated into society, anthropomorphic design raises pressing questions: Do human-like traits improve communication and acceptance, or do they lead to unrealistic expectations? What ethical implications arise when machines simulate empathy or emotion? This interdisciplinary call welcomes contributions from fields such as psychology, engineering, philosophy, and education. Submissions may include empirical research, theoretical analysis, reviews, or case studies that explore how human-likeness shapes the way we engage with AI. The deadline for manuscript summaries is September 22, 2025; full manuscripts are due by January 10, 2026. Articles will undergo peer review and are subject to publication fees upon acceptance. Topic editors are Dr. Katharina Kühne (University of Potsdam, Germany) and Prof. Dr. Roger K. Moore (The University of Sheffield, United Kingdom). For full details and submission guidelines, visit: www.frontiersin.org/research-topics/72370/exploring-human-likeness-in-ai-from-perception-to-ethics-and-interaction-dynamics.

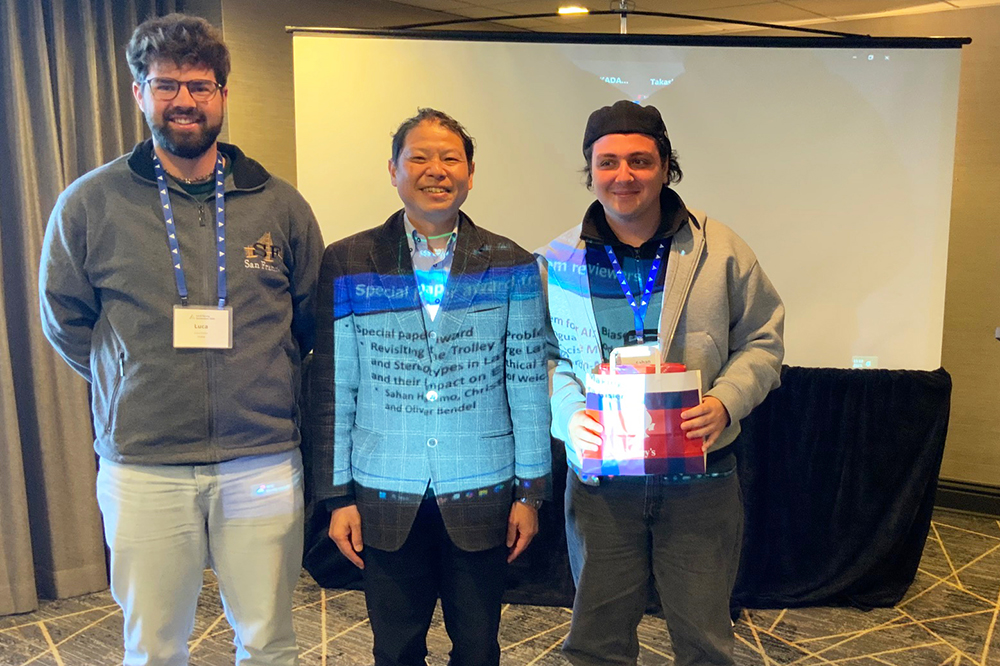

Revisiting the Trolley Problem for AI

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel received the Special Paper Award from the reviewers of the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The three bachelor's students from the FHNW School of Computer Science gave their presentation on site in San Francisco as part of the AAAI 2025 Spring Symposia. Prof. Dr. Bendel from the FHNW School of Business had given them feedback on the project and placed it in the context of machine ethics. Since 2016, he has been involved in this well-known and popular conference, which usually takes place at Stanford University. This time, he kept his fingers crossed from Italy. The proceedings of the AAAI 2025 Spring Symposia will be published shortly.

Sponsorship for the ICSR 2025

Join us as a sponsor for the 17th International Conference on Social Robotics & AI, taking place on 10–12 September 2025 in Naples, Italy. This prestigious event brings together researchers, leading experts, innovators, and thought leaders in the fields of robotics, AI, and human-robot interaction. Don’t miss the opportunity to align your brand with cutting-edge advancements. We offer different sponsorship and exhibition packages; all information is available at icsr2025.eu/sponsor2/. If you are interested or have any questions, don’t hesitate to contact us at info@icsr2025.eu with the subject line tag [Sponsorship]. The ICSR is one of the leading conferences for social robotics worldwide. Participants will meet for two days at the Parthenope University of Naples and on the third day at the Città della Scienza conference center. More information is available at icsr2025.eu.

About Inclusive AI

The Gabler Wirtschaftslexikon is the largest economics encyclopedia in the German-speaking world. In March 2025, Oliver Bendel published an article on inclusive AI in this reference work. The first part reads as follows: “Inclusive AI aims, on the one hand, to combat phenomena in artificial intelligence (AI) that have an exclusionary character – such as bias, hallucination, hate speech, and deepfakes – and, on the other hand, to strengthen applications with an inclusionary character in order to support those affected. One meaning is associated with terms like ‘responsible AI’, ‘explainable AI’, and ‘trustworthy AI’, while the other aligns with concepts such as ‘AI for good’ or ‘AI for wellbeing’. Overall, this falls under the umbrella of ‘ethical AI’, though this term is also used in marketing contexts. In 2025, the World Economic Forum (WEF) pointed to the digital divide, asking: ‘How do we ensure that the growth of AI doesn’t leave people behind and is truly inclusive?’ Inclusive AI can be associated with generative AI as well as with other forms of artificial intelligence.” The full article is available at:

wirtschaftslexikon.gabler.de/definition/inclusive-ai-171870.

A Video About the ICSR 2023

ICSR is one of the leading conferences for social robotics worldwide. The 17th edition will take place from 10 to 12 September 2025 in Naples, Italy. Participants will meet for two days at the Parthenope University of Naples and on the third day at the Città della Scienza conference center. In 2023, the conference took place in Doha, Qatar. A YouTube video by Hooman Samani provides insights into its presentations and events: www.youtube.com/watch?v=MtgM8pTPw8c. It shows not only that numerous top-class presentations take place, but also that the members of the community are highly motivated and have a lot of fun. The conference's high level of internationality and diversity also deserves emphasis. The ICSR 2025 website has been online since January: icsr2025.eu.

Human Stuff from AI Characters

“Left to their own devices, an army of AI characters didn’t just survive – they thrived. They developed in-game jobs, shared memes, voted on tax reforms and even spread a religion.” This was reported by MIT Technology Review in an article published on November 27, 2024. “The experiment played out on the open-world gaming platform Minecraft, where up to 1000 software agents at a time used large language models (LLMs) to interact with one another. Given just a nudge through text prompting, they developed a remarkable range of personality traits, preferences and specialist roles, with no further inputs from their human creators.” (MIT Technology Review, 27 November 2024) According to the magazine, the work by AI startup Altera is part of a broader field that seeks to use simulated agents to model how human groups would react to new economic policies or other interventions. The article, entitled “These AI Minecraft characters did weirdly human stuff all on their own”, is available on the MIT Technology Review website.