On October 24, 2025, the kick-off meeting for the ECHO project took place at the FHNW School of Business. Two weeks later, on November 7, the proposal was approved. The project collaborator is BIT student Lucas Chingis Marty, who is writing his thesis on this topic. The initiator is Prof. Dr. Oliver Bendel. ECHO is an MLLM-based chatbot that introduces children, young people, and laypeople to the world of music. It can listen to, describe, and evaluate pieces and songs. To do this, it is equipped with a powerful audio analysis module. It refers to key, melody, and harmony, among other things. ECHO makes music accessible and understandable without requiring any prior knowledge. The aim is to promote curiosity, listening comprehension, and artistic taste. The prototype is expected to be available in February 2026.

GPT-AfterDark is Coming

According to several media reports on 15 October 2025, ChatGPT is set to get an erotic function. This is likely to include features such as dirty talk – via text and voice – but possibly also instructions for all kinds of positions and tips and tricks for sex toys and sex robots. This follows in the footsteps of other chatbots such as Replika. However, these often have an avatar to make them irresistible. This is not the case with ChatGPT, apart from the small round tiles of the GPTs, the “custom versions” that anyone can easily create. Among these, incidentally, is a SexGPT by Dominick Pandolfo – “Provides sexual health information”, so quite harmless. Artificial Life’s virtual girlfriend already existed at the turn of the millennium, also in linguistic and visual form. If OpenAI does not change this, users will build something themselves, which is already being done today, albeit not necessarily in a sexual sense. Meshy AI and similar tools can be used to generate and animate three-dimensional avatars. It will be interesting to see whether the German ChatGPT version uses gender language in its erotic function – as it does in the default setting. Some people may find this arousing, others may not. When asked what this version of ChatGPT could be called, the chatbot itself suggested: ChatGPT Red, GPT-AfterDark, or DeepLure. If that doesn’t turn you on, there’s no helping you.

Manipulated Chatbots as Munchausen Machines

In 2013, Prof. Dr. Oliver Bendel came up with the idea for his LIEBOT, also known as Lügenbot. On September 11, 2013, he published an article titled “Der Lügenbot und andere Münchhausen-Maschinen” in the magazine CyberPress. More articles and contributions followed until a prototype was implemented in 2016. Kevin Schwegler, then a student of the philosopher of technology, was responsible for this work. He developed a chatbot that transformed truthful statements into false ones using seven different strategies. In the summer of 2016, for example, LIEBOT claimed that Donald Trump was the President of the United States. To make this statement, it had used information from Yahoo in a multi-step process. The results of the project were documented in a paper titled “Towards Kant Machines” and presented in March 2017 at the AAAI Spring Symposia at Stanford University. One might argue that LIEBOT does not have intentions of its own and therefore does not lie in the strict sense. However, this intent was programmed into it. In a way, it lies on behalf of its creators. With this project, Oliver Bendel wanted to demonstrate that it is possible to build dialogue systems capable of spreading falsehoods. Today, such systems seem to be omnipresent in the form of LLMs. However, one has to look closely to discern the differences. In his book “300 Keywords Generative KI”, Oliver Bendel writes: “Hallucinating machines do not necessarily qualify as Munchausen machines in the strict sense, since there is no intent – or at least intent can hardly be proven.” Manipulated LLM-based chatbots, on the other hand, come very close to LIEBOT. ChatGPT and similar systems pursue a political agenda and exhibit an ideological tendency.

Taming the Lion of the LLM

The paper “Miss Tammy as a Use Case for Moral Prompt Engineering” by Myriam Rellstab and Oliver Bendel from the FHNW School of Business was accepted at the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. It describes the development of a chatbot that can be available to pupils and de-escalate their conflicts or promote constructive dialogues among them. Prompt engineering – called moral prompt engineering in the project – and retrieval-augmented generation (RAG) were used. The centerpiece is a collection of netiquettes. On the one hand, these control the behavior of the chatbot – on the other hand, they allow it to evaluate the behavior of the students and make suggestions to them. Miss Tammy was compared with a non-adapted standard model (GPT-4o) and performed better than it in tests with 14- to 16-year-old pupils. The project applied the discipline of machine ethics, in which Oliver Bendel has been conducting research for many years, to large language models, using the netiquettes as a simple and practical approach. The eight AAAI Spring Symposia will not be held at Stanford University this time, but at the San Francisco Airport Marriott Waterfront, Burlingame, from March 31 to April 2, 2025. It is a conference rich in tradition, where innovative and experimental approaches are particularly in demand.

22 Chatbots and Voice Assistants

Since 2013, Oliver Bendel has developed 22 chatbots and voice assistants together with his students or colleagues. They can be divided into three categories. The first are moral and immoral chatbots (i.e., forms of moral machines) and empathic voice assistants. The second are chatbots (some with voice output) for dead, endangered, or extinct languages and idioms. The third are pedagogical chatbots and chatbots that give recommendations and advice. Some of the projects lasted between four and six months. Most of the GPTs were created in just a few hours. Exceptions are Miss Tammy and Animal Whisperer, which took several months to build with the help of prompt engineering and retrieval-augmented generation (RAG). Articles and book chapters have been published on many of the projects. The names of the developers can be found in these. A few chatbots made it into the media, such as GOODBOT (for which the preparatory work began in 2012), LÜGENBOT aka LIEBOT, and @llegra.

kAIxo says “kaixo”

The final presentation of the “kAIxo” project took place on January 9, 2025. Nicolas Lluis Araya was the project team member. The FHNW School of Business has been developing chatbots for dead, endangered, and extinct languages for several years. A well-known example is @llegra, a chatbot for Vallader. In the spring of 2024, Oliver Bendel tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for them. On May 12, 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the “kAIxo” project (the Basque “kaixo” corresponds to the English “hello”), the chatbot kAIxo was built, which speaks Basque. Its purpose is to keep users practicing the written or spoken language or to awaken in them the desire to learn the endangered language. The chatbot is based on GPT-4o and Gemini 1.5 Flash, and users can select their preferred large language model (LLM). Retrieval-augmented generation (RAG) plays a central role. The ChatSubs dataset is used, which contains subtitles of movie dialogs in Basque. Thanks to a text-to-speech engine, the chatbot can also speak. At the final presentation, Nicolas Lluis Araya presented a working prototype that can be accessed via www.kaixo.ch.

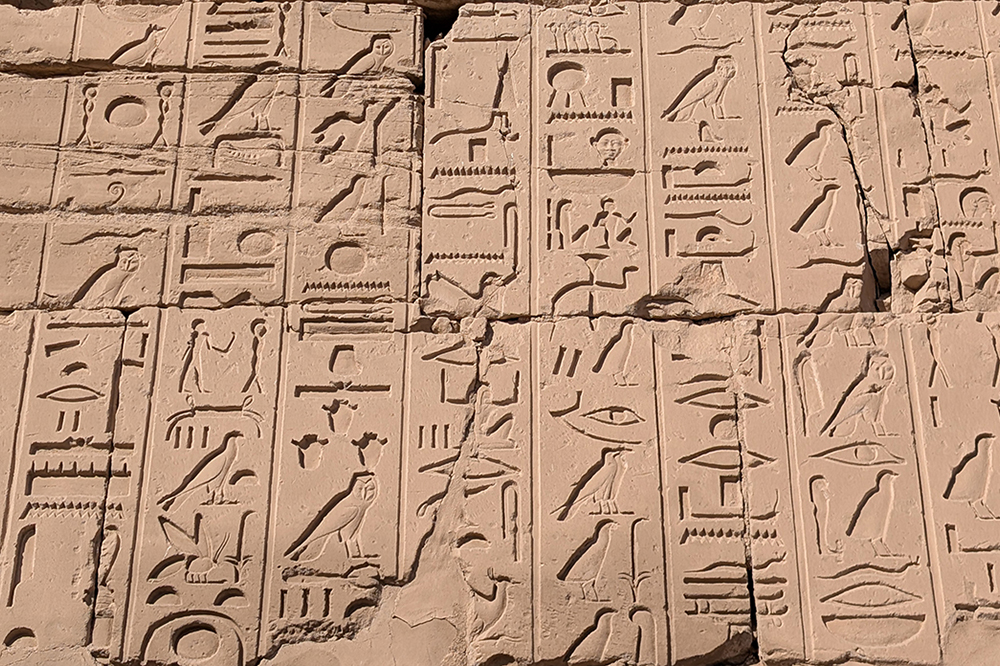

Cleop@tr@ Visits the Karnak Temple

Cleop@tr@ was invented by Prof. Dr. Oliver Bendel in May 2024. It is a GPT that specializes in Egyptian. It is also familiar with the culture and history of ancient Egypt. Since 2012, the technology philosopher and information systems specialist has been building chatbots and voice assistants – partly with his students and partly on his own. These have been discussed by the media and found interesting by NASA. Under his supervision, Karim N’diaye developed the chatbot @ve for Latin, Dalil Jabou the voice-enhanced chatbot @llegra for Vallader, and Nicolas Lluis Araya the voice-enhanced chatbot kAIxo for Basque. For some time now, he has been testing the reach of GPTs for endangered languages such as Irish, Maori, and Basque. He is also investigating the potential for extinct languages such as Egyptian (Cleop@tr@) and Akkadian (H@mmur@pi). The GPTs do not readily communicate in hieroglyphics and cuneiform, but they can certainly represent and explain signs of visual languages. It is even possible to enter entire sentences and then ask how they can be improved or what they mean. In December 2024, Oliver Bendel tested his Cleop@tr@ in the Karnak Temple in Luxor. She was able to provide coherent explanations and translations for several inscriptions on columns and walls. However, further tests also revealed clear errors. Ultimately, Egyptologists will have to assess how reliable it is.

The kAIxo Project

The interim presentation of the kAIxo project took place on 11 November 2024. Nicolas Lluis Araya is the project collaborator. Chatbots for dead, endangered, and extinct languages are being developed at the FHNW School of Business. One well-known example is @llegra, a chatbot for Vallader. Oliver Bendel recently tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material available for them. On 12 May 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the “kAIxo” project (the Basque “kaixo” corresponds to the English “hello”), the chatbot or voice assistant kAIxo is being built to speak Basque. Its purpose is to keep users practising the written or spoken language or to awaken in them the desire to learn the endangered language. The chatbot is based on GPT-4o. Retrieval-augmented generation (RAG) plays a central role. The ChatSubs dataset is used, which contains dialogues in Spanish and the three other official languages of Spain (Catalan, Basque, and Galician). Nicolas Lluis Araya presented a functioning prototype at the interim presentation. This is now to be expanded step by step.

Start of the kAIxo Project

Chatbots for dead, endangered, and extinct languages are being developed at the FHNW School of Business. One well-known example is @llegra, a chatbot for Vallader. Oliver Bendel recently tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for them. On May 12, 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the kAIxo project (the Basque “kaixo” corresponds to the English “hello”), a chatbot or voice assistant named kAIxo that speaks Basque is to be developed. The purpose is to keep users practicing the written or spoken language or to awaken in them the desire to learn the endangered language. The chatbot should be based on a large language model (LLM). Both prompt engineering and fine-tuning are conceivable for customization. Retrieval-augmented generation (RAG) can play a central role. The result will be a functioning prototype. Nicolas Lluis Araya, a student of business informatics, has been recruited to implement the project. The kick-off meeting will take place on September 3, 2024.

Teaching and Learning with GPTs

In the spring semester of 2024, Prof. Dr. Oliver Bendel integrated virtual tutors into his teaching. These were “custom versions of ChatGPT”, so-called GPTs. Social Robotics Girl, created in November 2023, was available for the elective modules on social robotics, and Digital Ethics Girl, created in February 2024, for the compulsory modules “Ethik und Recht” and “Ethics and Law” within the Wirtschaftsinformatik and Business Information Technology degree programmes (FHNW School of Business) and “Recht und Ethik” within Geomatics (FHNW School of Architecture, Construction and Geomatics). The virtual tutors have the “world knowledge” of GPT-4, but also the specific expertise of the technology philosopher and business information scientist from Zurich. It has been shown that the GPTs can provide certain impulses and loosen up the lessons. They show their particular strength in group work, where students no longer have to consult their lecturer’s books – which is hardly useful when there is a lot of time pressure – but can ask the GPTs specific questions. Last but not least, there are opportunities for self-regulated learning. The paper “How Can GenAI Foster Well-being in Self-regulated Learning?” by Stefanie Hauske and Oliver Bendel was published in May 2024 – it was submitted to the AAAI Spring Symposia in December 2023 and presented at Stanford University at the end of March 2024.