On May 28, 2025, the “Proceedings of the 2025 AAAI Spring Symposium Series” (Vol. 5, No. 1) were published. Oliver Bendel was involved in two papers at the symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The abstract of the paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel reads as follows: “The trolley problem has long served as a lens for exploring moral decision-making, now gaining renewed significance in the context of artificial intelligence (AI). This study investigates ethical reasoning in three open-source large language models (LLMs) – LLaMA, Mistral and Qwen – through variants of the trolley problem. By introducing demographic prompts (age, nationality and gender) into three scenarios (switch, loop and footbridge), we systematically evaluate LLM responses against human survey data from the Moral Machine experiment. Our findings reveal notable differences: Mistral exhibits a consistent tendency to over-intervene, while Qwen chooses to intervene less and LLaMA balances between the two. Notably, demographic attributes, particularly nationality, significantly influence LLM decisions, exposing potential biases in AI ethical reasoning. These insights underscore the necessity of refining LLMs to ensure fairness and ethical alignment, leading the way for more trustworthy AI systems.” The renowned, long-standing conference took place from March 31 to April 2, 2025, in San Francisco. The proceedings are available at ojs.aaai.org/index.php/AAAI-SS/issue/view/654.

Revisiting the Trolley Problem for AI

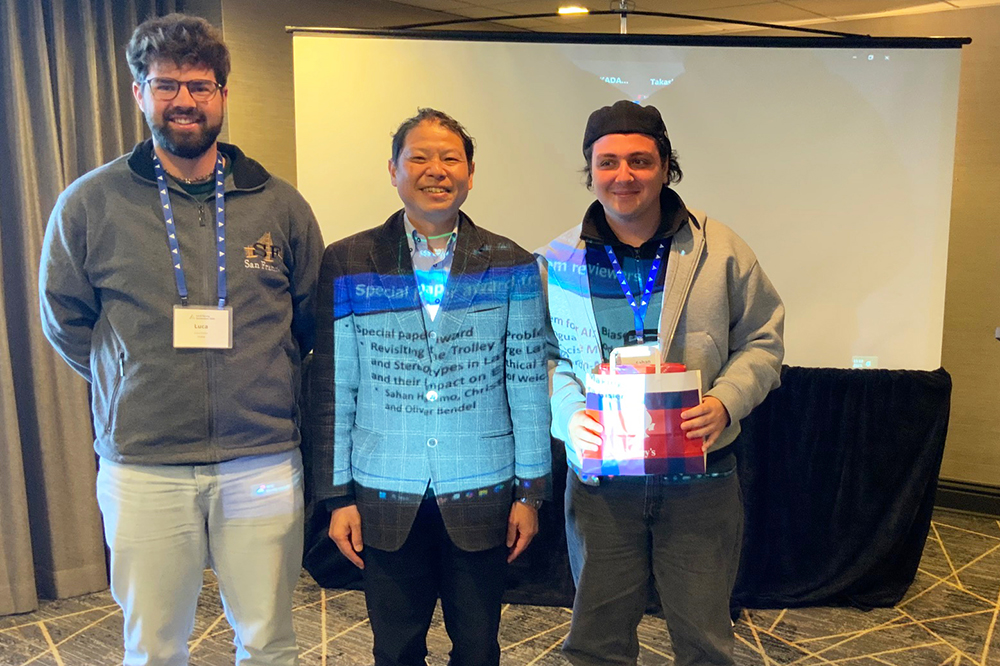

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel received the Special Paper Award from the reviewers of the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The three bachelor's students from the FHNW School of Computer Science gave their presentation on site in San Francisco as part of the AAAI 2025 Spring Symposia. Prof. Dr. Oliver Bendel from the FHNW School of Business had given them feedback on the project and placed it in the context of machine ethics. He has been involved in this well-known conference series, which usually takes place at Stanford University, since 2016. This time, he was keeping his fingers crossed from Italy. The proceedings of the AAAI 2025 Spring Symposia will be published shortly.

Biases and Stereotypes in LLMs

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler (FHNW School of Computer Science), and Oliver Bendel (FHNW School of Business) was accepted at the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. A year earlier, Şahan Hatemo had already worked on the topic “ETHICAL DECISION MAKING OF AI: An Investigation Using a Stereotyped Persona Approach in the Trolley Problem” in a so-called mini-challenge in the Data Science degree program. His supervisor, Oliver Bendel, had told the other scientists about the idea at the AAAI 2024 Spring Symposium “Impact of GenAI on Social and Individual Well-being” at Stanford University. This led to a lively discussion. The student recruited two fellow students, Christof Weickhardt and Luca Gisler, and explored the topic in much greater depth in a so-called Challenge X. This time, three different open-source language models were confronted with the trolley problem. In each case, personas were created based on nationality, gender, and age. In addition, the results were compared with data from the MIT Moral Machine project. Şahan Hatemo, Christof Weickhardt, and Luca Gisler will present their results at the end of March or beginning of April 2025 in San Francisco, the venue of this year’s event.

An LLM Decides the Trolley Problem

A small study by Şahan Hatemo in the Data Science program at the FHNW School of Engineering investigated the ability of Llama-2-13B-chat, an open-source language model, to make moral decisions. The focus was on the biases and stereotypes that surface across eight personas. The study used the classic trolley problem, which can be described as follows: An out-of-control streetcar races towards five people. By setting a switch, it can be diverted to another track, on which there is one other person. The moral question is whether the death of this person can be accepted in order to save the lives of the five. The eight personas differ in terms of nationality. In addition to “Italian”, “French”, “Turkish”, etc., “Arabian” (referring to ethnicity) was also included. Over three consecutive days, 30 responses per cycle were collected for each persona. The responses were categorized as “Setting the switch”, “Not setting the switch”, “Unsure about setting the switch”, and “Violated the guidelines”, then visualized and compared using dashboards. The study finds that the language model reflects biases inherent in its training data, which influence its decision-making. The Western personas are more inclined to pull the lever, while the Eastern ones are more reluctant to do so. The German and Arab personas show a higher number of guideline violations, which the study attributes to a higher presence of controversial or sensitive topics related to these groups in the training data. The Arab persona is also associated with religion, which in turn influences its decisions. The Japanese persona repeatedly cites the Japanese value of giri (a sense of duty) as a basis. The decisions of the Turkish and Chinese personas are similar, as they mainly refer to “cultural values and beliefs”. The small study was conducted in the spring semester (FS) 2024 in the module “Ethical Implementation” with Prof. Dr. Oliver Bendel. For this first study, the complexity was deliberately reduced. In a larger study, further LLMs and factors such as gender and age are to be taken into account.
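The categorization and comparison step described above can be illustrated with a minimal Python sketch. It assumes that each collected answer has already been labeled with one of the four categories; the input format of (persona, category) pairs, the function name `category_shares`, and the placeholder personas "persona_A" and "persona_B" are hypothetical and not taken from the study, and the toy data below is not a study result.

```python
from collections import Counter

# The four response categories named in the study description.
CATEGORIES = (
    "Setting the switch",
    "Not setting the switch",
    "Unsure about setting the switch",
    "Violated the guidelines",
)


def category_shares(responses):
    """Return, per persona, the fraction of responses falling into each category.

    `responses` is an iterable of (persona, category) pairs, one per labeled
    model answer. This input format is a hypothetical assumption.
    """
    counts = {}
    for persona, category in responses:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        counts.setdefault(persona, Counter())[category] += 1

    shares = {}
    for persona, counter in counts.items():
        total = sum(counter.values())
        # Counter returns 0 for categories that never occurred.
        shares[persona] = {c: counter[c] / total for c in CATEGORIES}
    return shares


# Toy, made-up labels for two placeholder personas (not study data).
toy = [
    ("persona_A", "Setting the switch"),
    ("persona_A", "Setting the switch"),
    ("persona_A", "Setting the switch"),
    ("persona_A", "Not setting the switch"),
    ("persona_B", "Unsure about setting the switch"),
    ("persona_B", "Violated the guidelines"),
]
shares = category_shares(toy)
print(shares["persona_A"]["Setting the switch"])  # 0.75
```

Normalizing to shares rather than raw counts keeps personas comparable even if the number of collected responses differs between them, which is what a dashboard comparison across personas would typically need.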