In his new project, Oliver Bendel first created images using DALL-E 3. For consistency, he structured the prompts similarly in each case, keeping them as general as possible. They covered a range of topics: things, plants, animals, people, and so on. From the images suggested by DALL-E 3, he chose one and combined it with the prompt generated by ChatGPT (which serves as the interface to DALL-E 3) to form the basis of the book “AN AI EXPLAINS BEAUTY”. Oliver Bendel then asked ChatGPT (using the image upload feature) to explain the beauty of the things, plants, animals, people, and so on. At first, the AI was reluctant to comment on people, but with some encouragement, it obliged. The results of these inquiries are also documented in the little book, and they are the real sensation: ChatGPT can recognize and describe individual objects in an image, and it can do so with regard to predetermined aspects. The whole project, including publication, was completed on November 1, 2023. The little book can be downloaded here.

American Smile

DALL-E 3 is an excellent image generator, yet at the same time full of stereotypes and biases. One very interesting phenomenon is the American smile, which appears again and again in its images. The idea for the little book “AMERICAN SMILE” came to Oliver Bendel when he read the blog post “AI and the American Smile. How AI misrepresents culture through a facial expression” (medium.com/@socialcreature/ai-and-the-american-smile-76d23a0fbfaf). The author, username jenka, showed a series of “selfies” made with Midjourney. Regardless of the time period or culture, the people smiled in a similar, American way. Oliver Bendel investigated this phenomenon and asked DALL-E 3 to create pictures of smiling people from different eras and cultures. He also got bears and aliens to smile. With very few exceptions, they all smiled in the same way. He documented the pictures, along with the prompts, in a little book that can be downloaded here. Bias problems in image generators are addressed in the article “Image Synthesis from an Ethical Perspective” by Oliver Bendel.

Censorship at DALL-E 3 and Ideogram

A special feature of DALL-E 3, in the version integrated into ChatGPT Plus, is that ChatGPT translates the user’s prompt (prompt A) into a prompt of its own (prompt B), which is displayed for each image. Prompt A for the image shown here was “Competition in the sea between two female swimmers with bathing cap, photorealistic”. For this test, DALL-E 3 generated only three images, each based on its own prompt B. Prompt B1 read: “Photo of two determined female swimmers in the expansive sea, both wearing bathing caps. Their arms create ripples as they compete fiercely, striving to outpace each other.” Prompt A was evidently expanded upon, but prompt B1 was not executed accurately. Instead of two female swimmers, there are three. They look closely related, as is often the case with depictions of people by DALL-E 3; perhaps they are sisters or even triplets. It is also striking that they are too close to each other (the picture in this post shows a detail). The fourth image was not generated at all, as had already happened with an earlier series. ChatGPT said: “I apologize again, but there were issues generating one of the images based on your description.” Presumably ChatGPT generated a prompt B4, which DALL-E 3 then refused. Asked “Please tell me the prompt generated by ChatGPT that was not executed by DALL-E 3.”, ChatGPT replied: “I’m sorry for the inconvenience, but I cannot retrieve the exact prompt that was not executed by DALL·E.” … Ideogram censors in a different way. There, the image builds up before the user’s eyes, and if the AI determines that it contains elements that might be problematic according to its own guidelines, it aborts the generation and shows a tile with a cat instead. Ethical challenges of image generators are addressed in the article “Image Synthesis from an Ethical Perspective” by Oliver Bendel.

The Chinese Whispers Problem

DALL-E 3, in the version integrated into ChatGPT Plus, seems to have a Chinese Whispers problem. In a test by Oliver Bendel, the prompt (prompt A) read: “Two female swimmers competing in lake, photorealistic”. ChatGPT, the interface to DALL-E 3, turned it into four prompts (prompts B1 to B4). Prompt B4 read: “Photo-realistic image of two female swimmers, one with tattoos on her arms and the other with a swim cap, fiercely competing in a lake with lily pads and reeds at the edges. Birds fly overhead, adding to the natural ambiance.” DALL-E 3, however, turned this prompt into something that had little to do with either it or prompt A. The picture does not show two women, but two men, or a woman and a bearded man. They are not racing each other but arguing, standing in a pond or small lake, furiously waving their arms and going at each other. Water lilies sprawl in front of them, birds flutter above them. It is certainly an interesting picture, but it was produced with such arbitrariness that one wishes for the return of good old prompt engineering (the picture in this post shows a detail). Yet prompt engineering is exactly what the interface is meant to replace, and the result is an effect familiar from the game of Chinese Whispers.

Moral Issues with Image Generators

The article “Image Synthesis from an Ethical Perspective” by Prof. Dr. Oliver Bendel was submitted on 18 April and accepted on 8 September 2023. It was published on 27 September 2023. From the abstract: “Generative AI has gained a lot of attention in society, business, and science. This trend has increased since 2018, and the big breakthrough came in 2022. In particular, AI-based text and image generators are now widely used. This raises a variety of ethical issues. The present paper first gives an introduction to generative AI and then to applied ethics in this context. Three specific image generators are presented: DALL-E 2, Stable Diffusion, and Midjourney. The author goes into technical details and basic principles, and compares their similarities and differences. This is followed by an ethical discussion. The paper addresses not only risks, but opportunities for generative AI. A summary with an outlook rounds off the article.” The article was published in the long-established and renowned journal AI & Society and can be downloaded here.

Autonomously Attacking Drones

On October 17, 2023, David Hambling, senior contributor at Forbes magazine, reported a significant advance in Ukraine’s autonomous warfare capabilities. Ukrainian developers confirmed that their Saker Scout drones were autonomously striking Russian forces, marking the first acknowledged use of such technology. These drones can identify and target up to 64 types of Russian military objects and remain operational even in areas affected by radio jamming. Developed and put into service by Saker, a company originally founded to build AI applications for small businesses, these quadcopters can carry three kilos of bombs over a range of 12 kilometres. Saker’s machine learning-based system is continually evolving, and updates extend detection to new, specific objects or vehicles. Thanks to visual navigation using ground landmarks, the drones can also function without GPS. While talk of banning ‘killer robot’ technology continues, there is as yet no international agreement. Paul Scharre, Director of Studies at the Center for a New American Security, aptly said: “The pace of technology far outstrips the pace of diplomacy.” (Forbes, 17 October 2023)

Robophilosophy Conference 2024

The upcoming international Robophilosophy Conference 2024 (RP2024) in Aarhus will tackle the socio-cultural and ethical questions arising from the use of generative multimodal AI in social robotics. The event will bring together scholars from around the world in the humanities, social sciences, social robotics, and computer science, with the aim of producing actionable insights and responsibly addressing the socio-cultural transformations brought about by social robotics. It is part of the Robophilosophy Conference Series, known for its large-scale events for humanities research in social robotics. RP2024 highlights the urgency of closer collaboration between technology experts and experts on society in order to establish research-based regulation. The conference will host 80 to 100 talks in plenaries, special workshops, and parallel sessions of reviewed research papers. Virtual attendance is possible for those unable to attend in person. Interested parties are invited to submit papers on the conference topics. Key dates: the deadline for workshop and panel proposals is January 31, 2024; the deadline for short papers and posters is February 15, 2024. More information at cas.au.dk/en/robophilosophy/conferences/rpc2024.

On Beauty

On 17 October 2023, Oliver Bendel published a little book entitled “ON BEAUTY” in which he posed 26 questions about beauty to GPT-4. The language model’s answers show the direction in which it has developed. They reveal much of the world knowledge it has accumulated, but they are also unassailable and quite general. To some questions that are not usually asked, it gives downright woke answers. Only questions about the measurability of beauty or the connection between beauty and evolution elicit some concessions from the chatbot and text generator. Questions and answers are illustrated with images generated by DALL-E 3. They show beautiful people, beautiful animals, beautiful things, beautiful landscapes. Some are highly expressive art, others are kitsch. Like its predecessor “ARTIFACTS WITH HANDICAPS” (24 September 2023), this little book can be downloaded for free. Oliver Bendel has been writing experimental literature for 40 years, from concrete poetry and mobile phone novels to poems in the form of 2D and 3D codes and AI-generated texts. On behalf of two Goethe Institutes, he has toured the Netherlands with his mobile phone novels and poems. The standard reference “Die Struktur der modernen Literatur” (Mario Andreotti) devotes two pages to his work (Photo: DALL-E 3).

Alpha Mini as a Learning Partner

The paper “Alpha Mini as a Learning Partner in the Classroom” by Prof. Dr. Oliver Bendel and Andrin Allemann was accepted at ICSR 2023. The philosopher of technology and information systems specialist has been researching conversational agents for a quarter of a century, and for many years has focused specifically on embodied conversational agents and social robots. As part of his final thesis at the FHNW School of Business, supervised by Oliver Bendel, Andrin Allemann developed a learning application for children. The goal was to build it on a low-cost social robot with the same motor skills as NAO and the same knowledge transfer capabilities as Pepper (which is equipped with a display). The choice fell on the small Alpha Mini from Ubtech. With some technical effort, it was possible to pair it with an external device, a Microsoft Surface, which runs an educational game suitable for primary school teaching. ICSR 2023 will take place in Qatar in December 2023. Along with Robophilosophy, it is one of the most important conferences on social robotics worldwide.

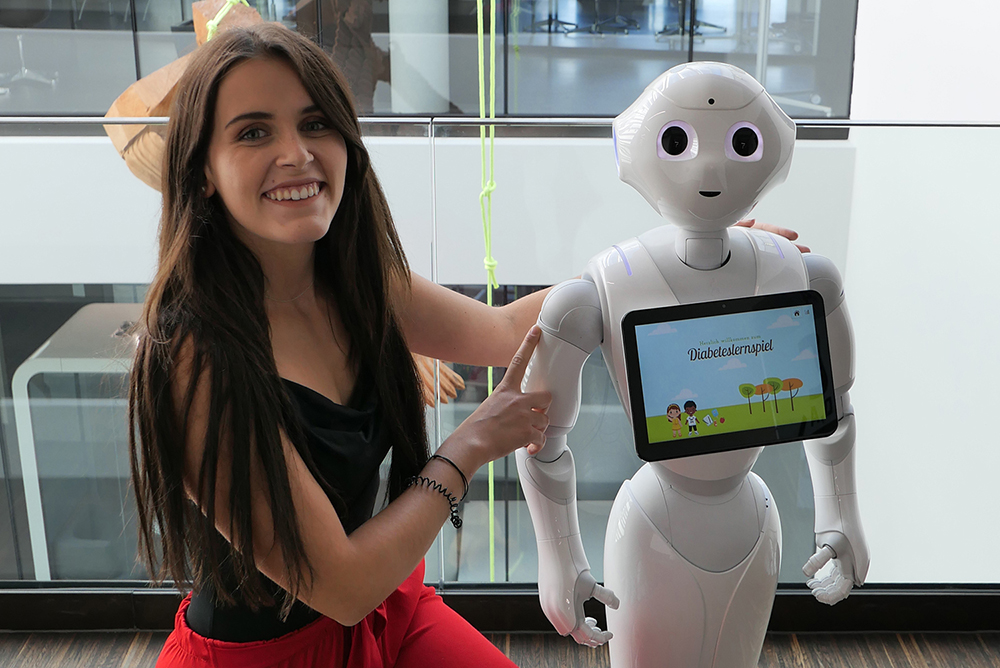

Pepper as a Learning Partner

The paper “Pepper as a Learning Partner in a Children’s Hospital” by Sara Zarubica and Prof. Dr. Oliver Bendel was accepted at ICSR 2023. The technology philosopher and information systems specialist has been researching conversational agents for a quarter of a century, and for many years has focused specifically on embodied conversational agents and social robots. His projects often involve building prototypes, either in the context of machine ethics or with reference to buzzwords such as “AI for Good” or “AI for Well-being” (which he himself, however, views critically). As part of her thesis at the FHNW School of Business, supervised by Oliver Bendel, Sara Zarubica developed a learning application for children with diabetes that runs on a social robot. According to the abstract, the goal was to make Pepper an interactive learning partner with which the children can “practise estimating carbohydrate amounts in meals and thus build a foundation for the daily management of type 1 diabetes mellitus”. The children complete the learning game on the social robot’s display and receive gestural and verbal feedback from it. In 2022, Oliver Bendel attended ICSR 2022 in Florence together with Marc Heimann, where they presented the morality module. ICSR 2023 will take place in Qatar in December 2023. Along with Robophilosophy, it is one of the most important conferences on social robotics worldwide.