The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from 1-4 July 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. The conference is accepting workshop proposals until 1 December 2025. Approved workshops will be announced two weeks after this submission deadline. Further information is available at icsr2026.uk.

Call for Special Sessions at ICSR

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from July 1–4, 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. The conference is accepting special session proposals on a rolling basis until 1 December 2025. Approved sessions will be added here as they are confirmed. Further information is available at icsr2026.uk. Two special sessions have already been accepted, namely “SS01: Cultural Robotics” and “SS02: Participatory Futures in Social Robotics and AI”.

ICSR + ART 2026 at Senate House

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from July 1-4, 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. This year’s edition will be hosted at Senate House, part of the University of London, located in the heart of central London. The venue is within walking distance of many of the city’s main attractions and stations. Senate House has appeared in several famous films and series, including “Batman Begins”, “The Dark Knight Rises”, “Fast & Furious 6”, “No Time to Die” (a James Bond film), “Nineteen Eighty-Four”, and the Netflix series “The Crown”. The building inspired George Orwell’s depiction of the Ministry of Truth in “1984”. Further information is available at icsr2026.uk (Photo: Bastique/CC BY-SA 3.0).

GPT-AfterDark is Coming

According to several media reports on 15 October 2025, ChatGPT is set to get an erotic function. This is likely to include features such as dirty talk – via text and voice – but possibly also instructions for all kinds of positions and tips and tricks for sex toys and sex robots. OpenAI thus follows in the footsteps of other chatbots such as Replika. However, these often have an avatar to make them irresistible. This is not the case with ChatGPT, apart from the small round tiles of the GPTs, the “custom versions” that anyone can easily create. Among these, incidentally, is a SexGPT by Dominick Pandolfo – “Provides sexual health information”, so quite harmless. Artificial Life’s virtual girlfriend already existed at the turn of the millennium, in both linguistic and visual form. If OpenAI does not add an avatar, users will build one themselves, which is already being done today, albeit not necessarily in a sexual context. Meshy AI and similar tools can be used to generate and animate three-dimensional avatars. It will be interesting to see whether the German ChatGPT version uses gender-inclusive language in its erotic function – as it does in the default setting. Some people may find this arousing, others may not. When asked what this version of ChatGPT could be called, the chatbot itself suggested: ChatGPT Red, GPT-AfterDark, or DeepLure. If that doesn’t turn you on, there’s no helping you.

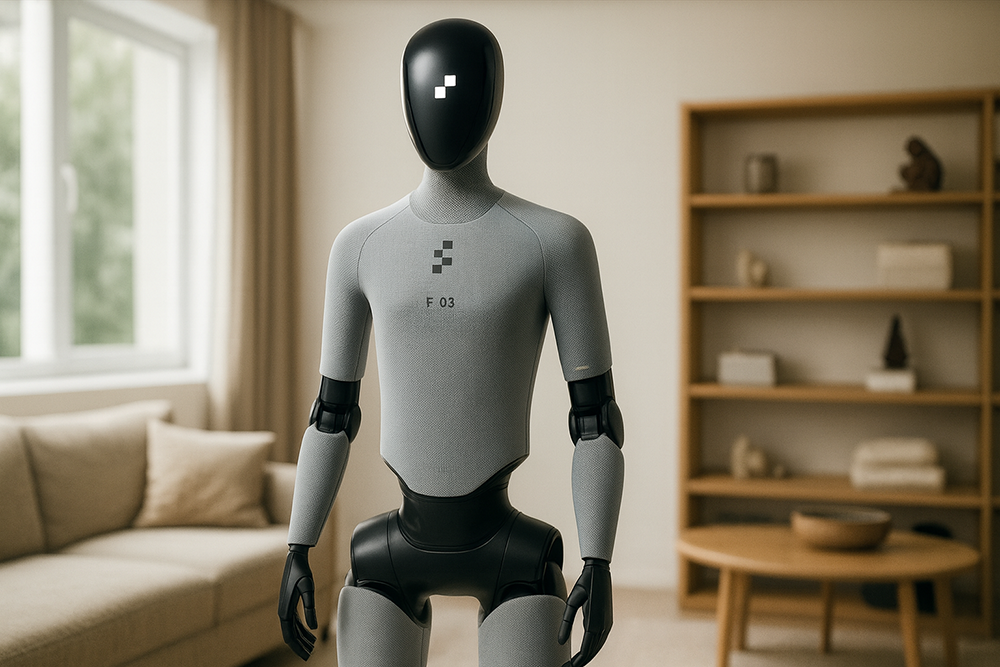

Launch of Figure 03

On October 9, 2025, Figure unveiled its latest general-purpose robot, called Figure 03. With the help of the Helix AI platform, it can understand speech, analyze and interpret its surroundings, and take appropriate action. Figure 03 is designed for use in the home, where it can do laundry or load and unload the dishwasher. It is covered with soft textiles instead of hard, machine-finished parts. As with earlier models, the face is mask-like and unapproachable. It has integrated palm cameras and fingers with tactile sensors. The company writes: “Each fingertip sensor can detect forces as small as three grams of pressure – sensitive enough to register the weight of a paperclip resting on your finger. This precision enables Helix to distinguish between a secure grip and an impending slip before it occurs, allowing fine-grained, dexterous control over fragile, irregular, or moving objects.” (Website Figure) Hundreds of thousands of units are to be produced in the coming years. Further information is available at www.figure.ai/figure (Image: ChatGPT/4o Image).

Unitree Robots in Venice

At the 2025 Architecture Biennale in Venice, Unitree robots are part of both an installation and a performance. While one robot paints on a canvas, the other plays music. However, you have to be lucky to see the performance, because most of the time the humanoid robots stand still or hang in the air. This is disappointing for visitors, who turn their attention to Alter3, which seems to know no fatigue. According to media reports, another performance featuring carving cobots is also not being shown live, for safety reasons. Instead, a screen shows the arms reaching out to the audience. Although such proximity is normal for a cobot, individual visitors, such as children, can be unpredictable. So, in the hall where the robots are concentrated, there is at least one negative example of how a robot park should not be implemented. Information about the Biennale Architettura is available on the website.

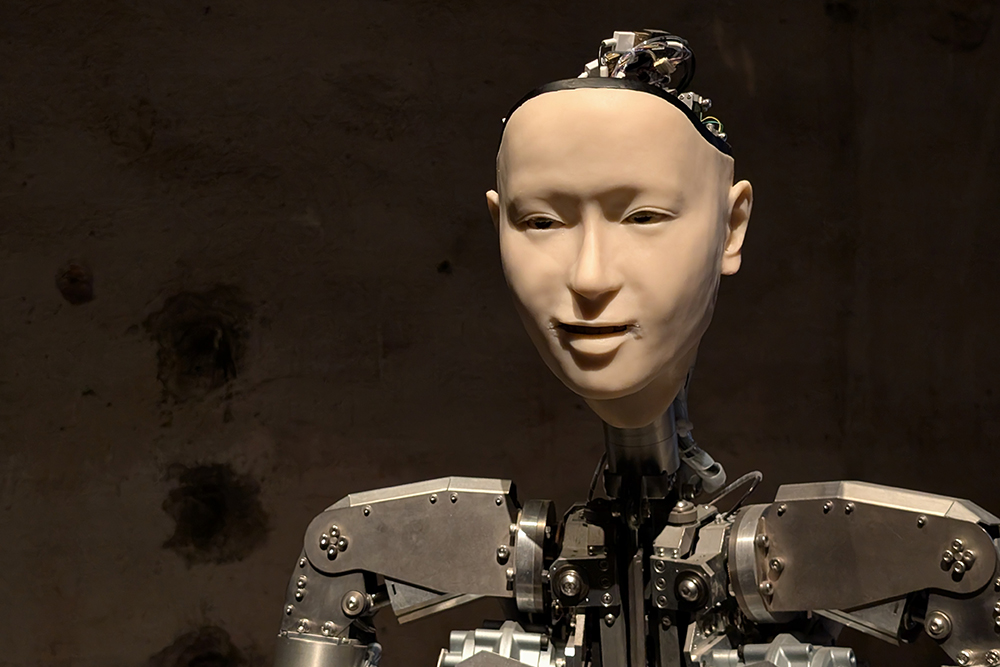

Alter3 in Venice

The installation entitled “Am I a Strange Loop?” will be on display at the 2025 Architecture Biennale in Venice. It raises the question of whether artificial intelligence can develop a form of self-awareness. The installation features the humanoid robot Alter3, which has facial, gestural, and verbal abilities. It uses GPT-4 or GPT-5. Visitors can communicate with it in different languages via a microphone. The installation draws on ideas from physicist, computer scientist, and cognitive scientist Douglas Hofstadter, who assumed that consciousness arises when a system reflects on itself. Alter3 is an impressive robot with a silicone face and silicone hands, but otherwise has a machine-like presence. Neither GPT-4, GPT-5, nor other language models can create world consciousness or self-awareness.

Initial Thoughts on Wearable Social Robots

Wearable social robots are very small yet extremely powerful systems that can be worn around the neck, on the body, or in a shoulder bag or handbag. They are not only companions to humans, but become part of them by expanding their senses and means of expression. The article entitled “This robot suits you well!” (subtitle: “On the phenomenon of wearable social robots”) by Oliver Bendel defines the term “wearable social robots”, presents areas of application, and discusses social and ethical challenges. Recommendations for developers and users are also provided. It becomes clear that wearable social robots represent novel tools and extensions or enhancements of humans, whose capabilities go beyond those of apps on smartphones. The article was published on September 25, 2025, in Wiley Industry News, not only in German but also in English. It can be accessed at www.wileyindustrynews.com/de/fachbeitraege/dieser-roboter-steht-ihnen-aber-gut or www.wileyindustrynews.com/en/contributions/that-robot-suits-you-well.

ICSR + Art 2026 in London

Oliver Bendel on Wearable Social Robots

At the last session of the ICSR on September 12, 2025, Oliver Bendel presented his full paper titled “Wearable Social Robots for the Disabled and Impaired”. He began by defining the term “wearable social robots”, which he sees as a special form and combination of wearable robots and social robots. One example is AIBI, a small robot that he briefly wore around his neck during the talk. Wearable social robots can include functions for games and entertainment, information and learning, navigation and description, and combating loneliness and anxiety. Potential user groups include pupils and students, prison inmates, astronauts, and disabled and impaired persons. Franziska and Julia demonstrated in videos how they use AIBI as a companion and for social support. With this paper, Oliver Bendel continued his work in the field of inclusive AI and inclusive robotics. The ICSR is one of the leading conferences for social robotics worldwide, and its 17th edition took place from September 10 to 12, 2025, in Naples, Italy. Mariacarla Staffa (University of Naples Parthenope, Italy), John-John Cabibihan (Qatar University, Qatar), and Bruno Siciliano (University of Naples Federico II, Italy) served as the main organizers. Over the course of the three days, 300 participants attended, contributing once again to the advancement of social robotics.