The GROUND workshop (advancing GROup UNderstanding and robots’ aDaptive behavior) is back for its third edition and will take place on June 30, 2025, as part of the IAS 19 Conference in Genoa, Italy. The keynote speeches will be given by Prof. Dr. Oliver Bendel, FHNW School of Business, and Prof. Dr. Silvia Rossi, University of Naples. The talk by Oliver Bendel, the technology philosopher and business information scientist from Zurich, is entitled “Robots, chatbots, and voice assistants in the classroom” and is summarized as follows on the GROUND website: “Chatbots, voice assistants, and robots – both programmable machines and social robots – have been used in learning for decades. Prof. Dr. Oliver Bendel from the FHNW School of Business in Switzerland presents his own projects from 15 years. Some of his chatbots and voice assistants, such as GOODBOT, BESTBOT, and SPACE THEA, recognized user problems and responded appropriately. They showed empathy and emotion. Pepper was used as an educational application for children with diabetes, and Alpha Mini as an educational application for elementary schools. Chatbots for dead, endangered, and extinct languages such as @ve, @llegra, and kAIxo can be integrated into learning environments for all ages. Today, the technology philosopher and information systems expert mainly uses GPTs such as Social Robotics Girl and Digital Ethics Girl in his courses. They can receive and answer questions from several students at the same time, even if they are asked in different languages. They are specialists in their field thanks to prompt engineering and retrieval-augmented generation (RAG). In his talk, Oliver Bendel will ask how chatbots, voice assistants, and social robots will be designed as adaptive systems for multi-user settings in the future. These capabilities are especially important in the classroom.” Further information is available at ground-hri.github.io/workshop/.

GROUND Workshop in Genoa

The GROUND workshop (advancing GROup UNderstanding and robots’ aDaptive behavior) is back for its third edition and will take place on June 30, 2025, as part of the IAS 19 Conference in Genoa, Italy. This full-day event focuses on robotics in multiparty scenarios and human-robot interaction in group settings. It offers a unique platform for researchers and practitioners to share ideas, explore new approaches, and connect with a passionate community working at the intersection of social interaction and robotics. Participants can look forward to inspiring keynotes and talks by Prof. Dr. Silvia Rossi, Prof. Dr. Oliver Bendel, and Dr. Isabel Neto, along with a hands-on tutorial led by Lorenzo Ferrini. The workshop invites short contributions (2–4 pages plus references) that present innovative strategies for advancing group-robot interaction. Key dates to keep in mind: the submission deadline is May 31, 2025; notifications of acceptance will be sent out on June 17, and the final camera-ready papers are due by June 22. For more information, visit the workshop website, the IAS-19 conference page, or go directly to the submission portal. Questions can be directed to: groundworkshop@gmail.com.

Manipulated Chatbots as Munchausen Machines

In 2013, Prof. Dr. Oliver Bendel came up with the idea for his LIEBOT, also known as Lügenbot. On September 11, 2013, he published an article titled “Der Lügenbot und andere Münchhausen-Maschinen” in the magazine CyberPress. More articles and contributions followed until a prototype was implemented in 2016. Kevin Schwegler, then a student of the philosopher of technology, was responsible for this work. He developed a chatbot that transformed truthful statements into false ones using seven different strategies. In the summer of 2016, for example, LIEBOT claimed that Donald Trump was the President of the United States. To make this statement, it had used information from Yahoo in a multi-step process. The results of the project were documented in a paper titled “Towards Kant Machines” and presented in March 2017 at the AAAI Spring Symposia at Stanford University. One might argue that LIEBOT does not have intentions of its own and therefore does not lie in the strict sense. However, this intent was programmed into it. In a way, it lies on behalf of its creators. With this project, Oliver Bendel wanted to demonstrate that it is possible to build dialogue systems capable of spreading falsehoods. Today, such systems seem to be omnipresent in the form of LLMs. However, one has to look closely to discern the differences. In his book “300 Keywords Generative KI”, Oliver Bendel writes: “Hallucinating machines do not necessarily qualify as Munchausen machines in the strict sense, since there is no intent – or at least intent can hardly be proven.” Manipulated LLM-based chatbots, on the other hand, come very close to LIEBOT. ChatGPT and similar systems pursue a political agenda and exhibit an ideological tendency.

ICSR 2025 Extends Deadline for Submission

The ICSR is one of the leading conferences for social robotics worldwide. The 17th edition will take place from 10 to 12 September 2025 in Naples, Italy. The deadline for the submission of complete entries has been extended again. Papers should consist of 11 pages of body text plus references as appropriate. The most important conference dates are: Full Paper Submission: April 30th, 2025; Full Paper Notification: June 6th, 2025; Camera-ready: June 30th, 2025; Paper Presentation Days at ICSR’25: September 11th and 12th, 2025. All dates are also listed on the website. “The conference theme, ‘Emotivation at the Core: Empowering Social Robots to Inspire and Connect,’ highlights the essential role of ‘Emotivation’ in social robotics. Emotivation captures the synergy between emotion and motivation, where emotions trigger and sustain motivation during interactions. In social robotics, this concept is key to building trust, fostering empathy, and supporting decision-making by enabling robots to respond sensitively to human emotions, inspiring engagement and action.” (Website ICSR) Participants will meet for two days at the Parthenope University of Naples and for the third day at the Città della Scienza conference center. All buildings and rooms are also listed on the website.

Image Synthesis from an Ethical Perspective

The article “Image Synthesis from an Ethical Perspective” by Prof. Dr. Oliver Bendel was published as an electronic version in the journal AI & SOCIETY on September 27, 2023. It addresses the ethical implications of image generators, i.e., a specific form of generative AI. It can now also be found in the current print edition from February 2025 (Volume 40, Issue 2). From the abstract: “Generative AI has gained a lot of attention in society, business, and science. This trend has increased since 2018, and the big breakthrough came in 2022. In particular, AI-based text and image generators are now widely used. This raises a variety of ethical issues. The present paper first gives an introduction to generative AI and then to applied ethics in this context. Three specific image generators are presented: DALL-E 2, Stable Diffusion, and Midjourney. The author goes into technical details and basic principles, and compares their similarities and differences. This is followed by an ethical discussion. The paper addresses not only risks, but opportunities for generative AI. A summary with an outlook rounds off the article.” A lot has happened with image generators since 2023. The new one from OpenAI now also allows photorealistic images, and it has fewer problems with average-looking people – DALL-E 2 and 3 favored beauty over mediocrity and ugliness. The article can be downloaded from link.springer.com/article/10.1007/s00146-023-01780-4 (Image: DALL-E 3).

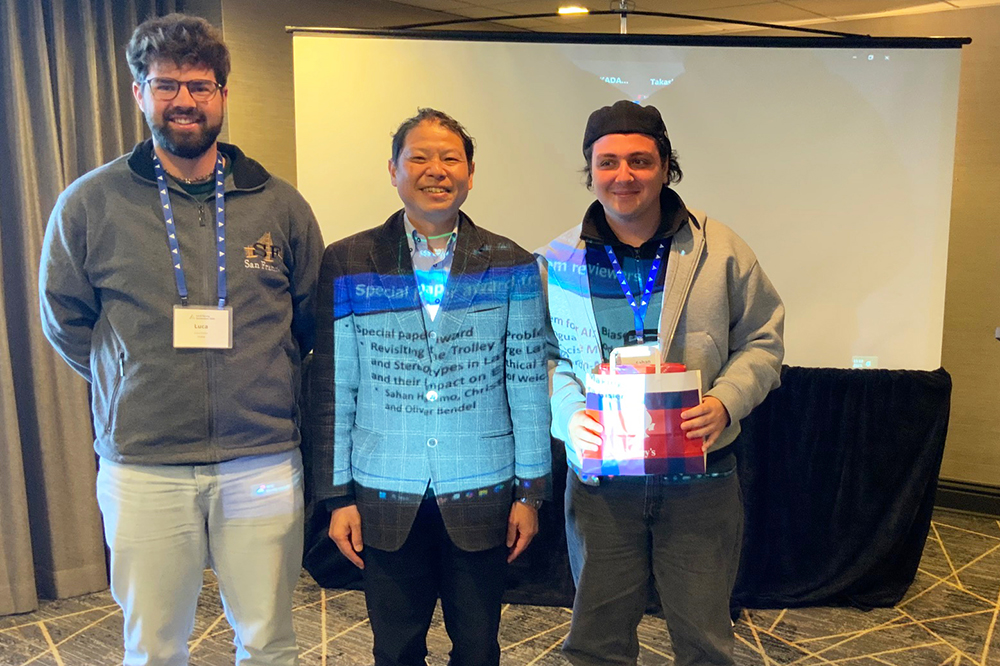

Revisiting the Trolley Problem for AI

The paper “Revisiting the Trolley Problem for AI: Biases and Stereotypes in Large Language Models and their Impact on Ethical Decision-Making” by Şahan Hatemo, Christof Weickhardt, Luca Gisler, and Oliver Bendel received the Special Paper Award from the reviewers of the AAAI 2025 Spring Symposium “Human-Compatible AI for Well-being: Harnessing Potential of GenAI for AI-Powered Science”. The three bachelor students from the FHNW School of Computer Science gave their presentation on site in San Francisco as part of the AAAI 2025 Spring Symposia. Prof. Dr. Bendel from the FHNW School of Business had given them feedback on the project and placed it in the context of machine ethics. He has been involved in the well-known and popular conference, which usually takes place at Stanford University, since 2016. This time, he was keeping his fingers crossed from Italy. The proceedings of the AAAI 2025 Spring Symposia will be published shortly.

This Robot Could Spy on You

As reported by the CyberInsider platform on March 24, 2025, security experts have discovered a pre-installed, undocumented remote access tunnel in the Unitree Go1 robot dogs that enables remote control and network access. The Unitree Go1 can be found in numerous companies and universities. During their tests, the researchers were able to list all connected devices and their IP addresses and access these devices. They were also able to use the robotic quadruped’s cameras for live monitoring. The article states: “Perhaps most concerning is the implication of deliberate design. The tunnel is not merely an overlooked debug utility; it appears fully integrated into the boot process and enabled by default.” (CyberInsider, 24 March 2025) Users should immediately isolate their devices from critical internal networks, change SSH credentials, and disable the tunnel service. More information is available on the platform.

Start of the VISUAL Project

The kick-off meeting for the VISUAL project took place on March 20, 2025. It was initiated by Prof. Dr. Oliver Bendel from the FHNW School of Business. “VISUAL” stands for “Virtual Inclusive Safaris for Unique Adventures and Learning”. There are webcams all over the world that show wild animals. Sighted people can use them to go on a photo safari from the comfort of their sofa. Blind and visually impaired people are at a disadvantage. As part of Inclusive AI – an approach and a movement that also includes apps such as Be My Eyes with the Be My AI function – a solution is to be found for them. The aim of the project is to develop a prototype by August 2025 that enables blind and visually impaired people to have webcam images or videos of wild animals described to them. The system analyzes and evaluates them with the help of a multimodal LLM. It presents the results in spoken language via an integrated text-to-speech engine. As a by-product, poaching, bush and forest fires and other events can be detected. The project is likely to be one of the first to combine inclusive AI with new approaches to animal-computer interaction (ACI). Doris Jovic, who is completing her degree in BIT, has been recruited to work on the project.

Sponsorship for the ICSR 2025

Join us as a sponsor for the 17th International Conference on Social Robotics & AI, taking place from 10 to 12 September 2025 in Naples, Italy. This prestigious event brings together researchers, leading experts, innovators, and thought leaders in the fields of robotics, AI, and human-robot interaction. Don’t miss the opportunity to align your brand with cutting-edge advancements. We offer different sponsorship and exhibition packages. All information is available at icsr2025.eu/sponsor2/. If you are interested or have any questions, don’t hesitate to contact us at info@icsr2025.eu with the subject line tag [Sponsorship]. The ICSR is one of the leading conferences for social robotics worldwide. Participants will meet for two days at the Parthenope University of Naples and for the third day at the Città della Scienza conference center. More information is available at icsr2025.eu.

About Inclusive AI

The Gabler Wirtschaftslexikon is the largest economics encyclopedia in the German-speaking world. In March 2025, Oliver Bendel published an article on inclusive AI in this reference work. The first part reads as follows: “Inclusive AI aims, on the one hand, to combat phenomena in artificial intelligence (AI) that have an exclusionary character – such as bias, hallucination, hate speech, and deepfakes – and, on the other hand, to strengthen applications with an inclusionary character in order to support those affected. One meaning is associated with terms like “responsible AI”, “explainable AI”, and “trustworthy AI”, while the other aligns with concepts such as “AI for good” or “AI for wellbeing”. Overall, this falls under the umbrella of “ethical AI”, though this term is also used in marketing contexts. In 2025, the World Economic Forum (WEF) pointed to the digital divide, asking: “How do we ensure that the growth of AI doesn’t leave people behind and is truly inclusive?” Inclusive AI can be associated with generative AI as well as with other forms of artificial intelligence.” The full article is available at:

wirtschaftslexikon.gabler.de/definition/inclusive-ai-171870.