The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from 1–4 July 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. As part of this edition, the ICSR 2026 Competition invites visionary concepts and prototypes for social robots that collaborate, care, and connect with people beyond the laboratory. Designers, engineers, artists, researchers, and pupils or students (school, college, and university) are invited to submit projects ranging from functional solutions to artistic or hybrid works. The competition features two categories: the Robot Design Competition, focusing on innovation in functionality, interaction, and application; and the Robot Art Competition, highlighting creative fusions of fashion, art, performance, and robotics. Hybrid projects may apply to both awards. Each entry must be described in a summary of up to two pages (preferably following Springer LNAI formatting), including an abstract of no more than 50 words and sufficient detail to judge novelty and impact. A single optional video link (maximum three minutes) and images or renderings are encouraged. Submissions should indicate whether they apply for the Design Award, the Art Award, or both, and be uploaded via the competition form at: icsr2026.uk/competition/. The competition submission deadline is 1 March 2026; finalists will be notified on 15 April 2026, and winners will be announced on 3 July 2026 during the closing ceremony of ICSR 2026.

AI Systems Harm the German Language

Users who translate texts from English or another language into German and are not native speakers of the target language should be cautious when using services such as DeepL and ChatGPT. 1. For both, the default setting is not the standard language, as one might assume, but a special language that is rejected by the majority of the language community and does not follow the official rules. These are determined for all German-speaking countries by the Rechtschreibrat. DeepL and ChatGPT follow their own rules or the inconsistent ideas of activists. The German language generated by DeepL and ChatGPT is often dysfunctional, incorrect, and imprecise. Formal inaccuracies can lead to inaccuracies in content. 2. If AI systems do not know words, they may simply replace them with completely different ones. In one test, DeepL translated “Animal-Computer Interaction” as “Mensch-Computer-Interaktion” (“Human-Computer Interaction”). This made the text factually incorrect. 3. Overall, especially with ChatGPT, English language structures are transferred to German. This results in unnatural-sounding lists, unlinked compounds (“Deep Learning Modelle” or “Deep Learning-Modelle” instead of “Deep-Learning-Modelle”), and unnecessary or incorrect hyphens (“nicht-amtliche Regeln” instead of “nichtamtliche Regeln”).

About Authentic Laughter

From November 2025 to February 2026, Sahan Hatemo of the FHNW School of Computer Science, Dr. Katharina Kühne of the University of Potsdam, and Prof. Dr. Oliver Bendel of the FHNW School of Business are conducting a research study. As part of this project, they are launching a sub-study that includes a short computer-based task and a brief questionnaire. Participants are asked to listen to a series of laughter samples and evaluate whether each one sounds authentic or not. The task involves 50 samples in total and typically takes about ten minutes to complete. Participation is possible via PC, laptop, or smartphone. Before starting, participants should ensure that their device’s sound is turned on and that they are in a quiet, distraction-free environment. The computer-based task and the brief questionnaire can be accessed at research.sc/participant/login/dynamic/3BE7321C-B5FD-4C4B-AF29-9A435EC39944.

Fundamentals of Animal-Machine Interaction

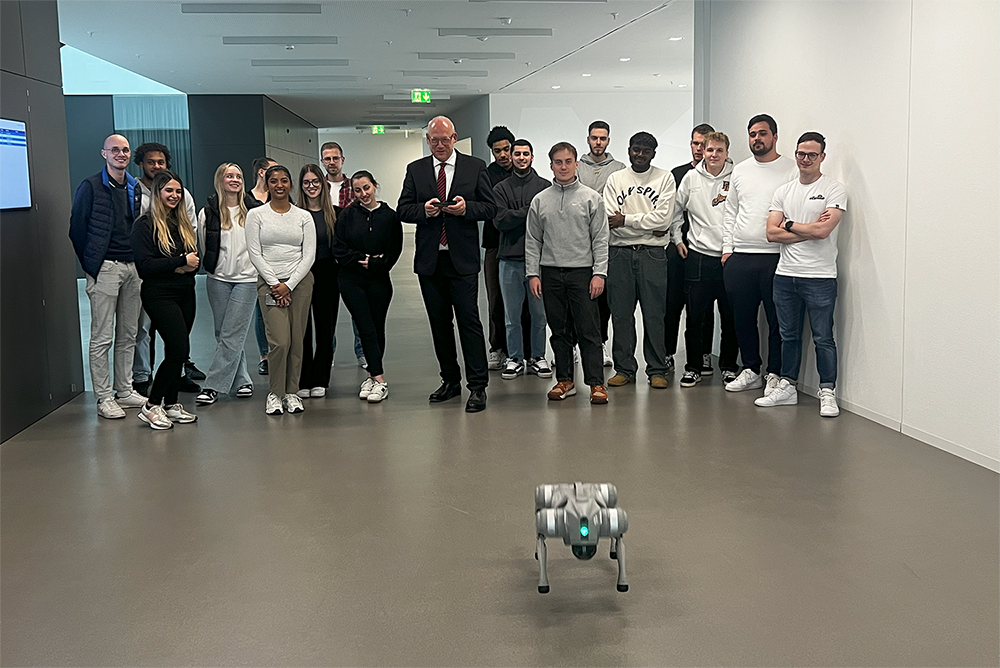

“Just.Us + Animal Welfare” is a lecture series organized by Department 10 Veterinary Medicine to promote animal welfare at Justus Liebig University Giessen. On November 12, 2025, Prof. Dr. Oliver Bendel gave a lecture on “Bao meets Pluto: Grundlagen und Beispiele der Tier-Maschine-Interaktion” (“Bao meets Pluto: Fundamentals and examples of animal-machine interaction”). Animal-machine interaction deals with the encounter and coexistence of animals and machines – from classic devices to vehicles, aircraft, and agricultural machinery to networked, autonomous robots and AI systems. The focus is on perception through sensors and senses, interaction and communication between animals and machines, and the question of how these encounters can be designed technically, organizationally, and ethically in such a way that risks for animals are reduced and potential for them and for humans is tapped. In his lecture, Oliver Bendel laid out the fundamentals of animal-machine interaction and described prototypes and projects. He also outlined what is possible and to be expected in this field of research in the coming years, for example in connection with robotic quadrupeds and bipeds. The online lecture was followed by 660 listeners. Further information is available at www.uni-giessen.de/de/fbz/zentren/icar3r/akademie/justus.

The Hippo in the Mud

On November 10, 2025, the article “There’s a Large Hippo Resting in the Mud” by Oliver Bendel and Doris Jovic was published, introducing the VISUAL project. “VISUAL” stands for “Virtual Inclusive Safaris for Unique Adventures and Learning”. All over the world, there are webcams showing wild animals. Sighted people can use them to go on photo and video safaris comfortably from their sofas. Blind and visually impaired people are at a disadvantage here. As part of Inclusive AI, the project developed a prototype specifically for them. It taps public webcams around the world that are directed at wild animals. Users can choose between several habitats on land or in water. They can also select “Adult” or “Child” as a profile and choose a role (“Safari Adventurer”, “Field Scientist”, “Calm Observer”). When the live video is accessed, three screenshots are taken and combined into a bundle. This bundle is analyzed and evaluated by GPT-4o, an MLLM. The user then hears a spoken description of the scene and the activities. The project is likely one of the first to combine Inclusive AI with new approaches in Animal-Computer Interaction (ACI). The article was published in Wiley Industry News and can be accessed at: wileyindustrynews.com/en/contributions/theres-a-large-hippo-resting-in-the-mud. It is also available in German.
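The bundling step described above can be illustrated with a minimal sketch. It is not the project's actual code: the function and class names, the prompt wording, and the choice of PNG screenshots are assumptions made for illustration. Only the profiles, the roles, the three-screenshot bundle, and the use of GPT-4o come from the description. The sketch builds a request body in the image-input format of OpenAI's chat completions API. Capturing the webcam frames, sending the request, and converting the answer to speech are left out.

```python
import base64
from dataclasses import dataclass

# Profiles and roles as named in the project description.
PROFILES = ("Adult", "Child")
ROLES = ("Safari Adventurer", "Field Scientist", "Calm Observer")


@dataclass
class SafariRequest:
    """Hypothetical container for the user's choices."""
    habitat: str
    profile: str
    role: str


def build_bundle_payload(request: SafariRequest, screenshots: list[bytes]) -> dict:
    """Combine three screenshots and the user's choices into one request body."""
    if request.profile not in PROFILES:
        raise ValueError(f"unknown profile: {request.profile}")
    if request.role not in ROLES:
        raise ValueError(f"unknown role: {request.role}")
    if len(screenshots) != 3:
        raise ValueError("the bundle is expected to hold exactly three screenshots")

    # Assumed prompt wording; the real prompt is not given in the source.
    prompt = (
        f"You are a {request.role} guiding a blind or visually impaired "
        f"{request.profile.lower()} on a virtual safari in the habitat "
        f"'{request.habitat}'. The three images are consecutive webcam "
        "screenshots. Describe the scene and what the animals are doing."
    )

    content = [{"type": "text", "text": prompt}]
    for png in screenshots:
        # Each image is embedded as a base64 data URL.
        encoded = base64.b64encode(png).decode("ascii")
        content.append(
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/png;base64,{encoded}"},
            }
        )

    return {"model": "gpt-4o", "messages": [{"role": "user", "content": content}]}
```

In a full prototype, the returned dictionary would be sent to the model, and the text of the answer would be passed to a text-to-speech component so that the user hears the description.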

Start of the ECHO Project

On October 24, 2025, the kick-off meeting for the ECHO project took place at the FHNW School of Business. Two weeks later, on November 7, the proposal was approved. The project collaborator is BIT student Lucas Chingis Marty, who is writing his thesis on this topic. The initiator is Prof. Dr. Oliver Bendel. ECHO is an MLLM-based chatbot that introduces children, young people, and laypeople to the world of music. It can listen to, describe, and evaluate pieces and songs. To do this, it is equipped with a powerful audio analysis module. It refers to key, melody, and harmony, among other things. ECHO makes music accessible and understandable without requiring any prior knowledge. The aim is to promote curiosity, listening comprehension, and artistic taste. The prototype is expected to be available in February 2026.

Call for Workshops at ICSR

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from 1–4 July 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. The conference is accepting workshop proposals until 1 December 2025. Approved workshops will be announced two weeks after this deadline. Further information is available at icsr2026.uk.

Call for Special Sessions at ICSR

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from July 1–4, 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. The conference is accepting special session proposals on a rolling basis until 1 December 2025. Approved sessions will be announced as they are confirmed. Two special sessions have already been accepted, namely “SS01: Cultural Robotics” and “SS02: Participatory Futures in Social Robotics and AI”. Further information is available at icsr2026.uk.

ICSR + ART 2026 at Senate House

The 18th International Conference on Social Robotics (ICSR + Art 2026) will take place in London, UK, from July 1–4, 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. This year’s edition will be hosted at Senate House, part of the University of London, located in the heart of central London. The venue is within walking distance of many of the city’s main attractions and stations. Senate House has appeared in several famous films and series, including “Batman Begins”, “The Dark Knight Rises”, “Fast & Furious 6”, “No Time to Die” (a James Bond film), “Nineteen Eighty-Four”, and the Netflix series “The Crown”. The building inspired George Orwell’s depiction of the Ministry of Truth in “1984”. Further information is available at icsr2026.uk (Photo: Bastique/CC BY-SA 3.0).

GPT-AfterDark is Coming

According to several media reports on 15 October 2025, ChatGPT is set to get an erotic function. This is likely to include features such as dirty talk – via text and voice – but possibly also instructions for all kinds of positions and tips and tricks for sex toys and sex robots. This follows in the footsteps of other chatbots such as Replika. However, these often have an avatar to make them irresistible. This is not the case with ChatGPT, apart from the small round tiles of the GPTs, the “custom versions” that anyone can easily create. Among these, incidentally, is a SexGPT by Dominick Pandolfo – “Provides sexual health information”, so quite harmless. Artificial Life’s virtual girlfriend already existed at the turn of the millennium, also in linguistic and visual form. If OpenAI does not add an avatar, users will build one themselves, which is already being done today, albeit not necessarily in a sexual sense. Meshy AI and similar tools can be used to generate and animate three-dimensional avatars. It will be interesting to see whether the German ChatGPT version uses gender language in its erotic function – as it does in the default setting. Some people may find this arousing, others may not. When asked what this version of ChatGPT could be called, the chatbot itself suggested: ChatGPT Red, GPT-AfterDark, or DeepLure. If that doesn’t turn you on, there’s no helping you.